I’ve spent over three decades in IT Operations. Despite all the talk of transformation, many of the fundamental challenges remain unchanged, or have even worsened. The rise of modern DevOps and observability promised to revolutionize how we monitor and maintain systems, but in reality, we’ve simply scaled up the same old problems. More data, more dashboards, and more alerts haven’t led to better outcomes.

The core issue? Our approach to observability has been misguided. We need a paradigm shift from a bottom-up approach to a top-down approach. Instead of bottoms up collecting everything hoping we find insights in the data, we need to start with the purpose or the insights we are looking for and collect only the data that will help us to infer these insights.

Part 1: Looking Back at the Days of ITOps

From the early days of IT Operations, we have been focusing on keeping the lights on. At the early days, systems were monolithic, monitoring was rudimentary, and troubleshooting often involved sifting through log files for hours on end. A major incident meant a war room filled with engineers manually correlating data, trying to pinpoint the root cause of an outage.

As infrastructure grew more complex, the industry responded by layering on more tools, with each promising to make troubleshooting easier. But in practice, these tools often just created more dashboards, more logs, and more alerts, leading to information overload. IT Operations needed to evolve, which gave rise to modern DevOps, but we are still in the early innings of the game.

Part 2: The Problem Today with Observability

Observability was supposed to solve these challenges by giving teams a deeper understanding of their systems. The idea was that by collecting and analyzing vast amounts of telemetry – metrics, events, logs, and traces – organizations would gain better insights and respond to issues faster.

Instead, we’ve seen an explosion of complexity. Today’s observability landscape is defined by:

-

Too much noise: The sheer volume of logs and alerts makes it nearly impossible for engineers to separate meaningful signals from the flood of data.

-

Too many tools: Companies rely on a fragmented ecosystem of monitoring, logging, and tracing solutions, leading to silos and inefficiencies.

-

Too much manual troubleshooting: Despite all this data, engineers still spend most of their time manually diagnosing incidents, correlating logs, and responding to false positives.

The promise of observability has not been fully realized because organizations are still focusing on data collection instead of shifting their focus to intelligent data processing.

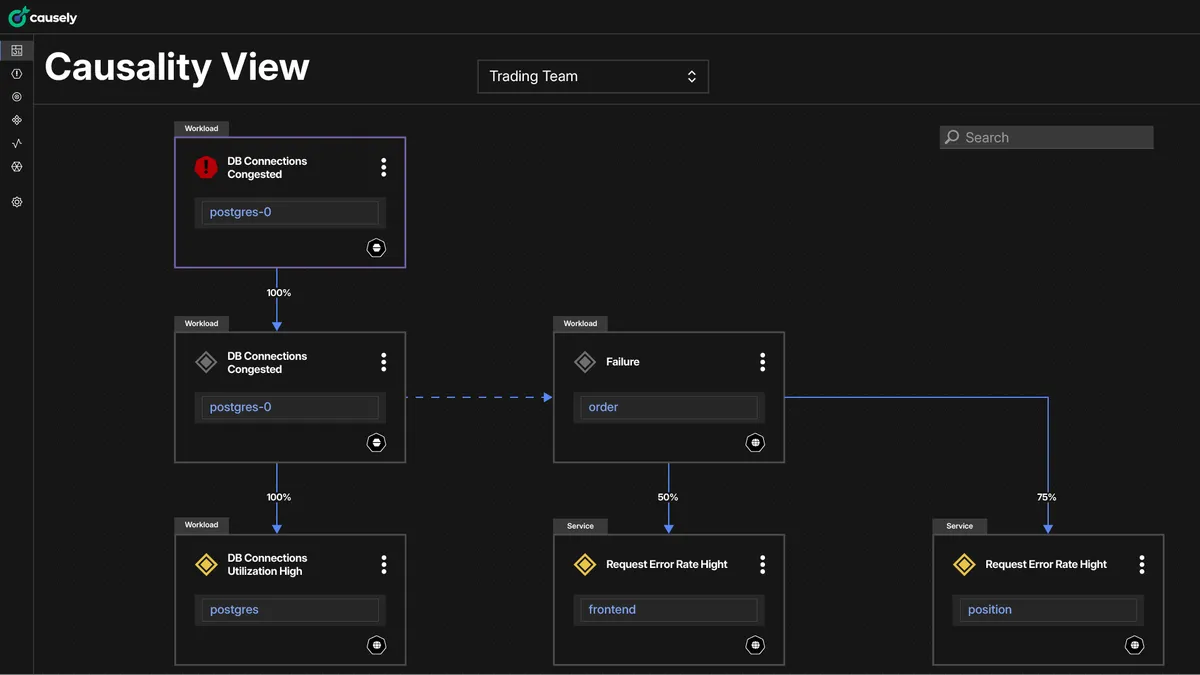

Part 3: Causely’s Paradigm Shift

At Causely, we believe that Observability must be disrupted and go through a paradigm shift from a bottom-up data collection to top-down purpose driven analytics that saves engineers from needing to spend hours sifting through data to figure out “the why behind the what.” We reject the notion that engineers will always need to spend time drilling through dashboards and making sense of the data coming from their tools. Instead, we believe that implementing the proper abstraction layer would allow systems to self-manage and take humans out of the troubleshooting loop. Instead of using tools that provide information for the engineers to analyze, the engineers should deploy systems that can actually make autonomous decisions.

This journey to autonomous service reliability is built on a number of core principles that I outlined in a recent blog. This shift from passively collecting data to relying on a system that actively interprets and acts on these insights is the future of observability.

Part 4: The Rise of AI and Agentic AI

We are on the cusp of a new era where Agentic AI can fundamentally reshape IT Operations. Instead of engineers reacting to alerts, AI-driven systems will proactively maintain service reliability, predicting and preventing failures before they occur.

I'm not talking about simple alerting rules or anomaly detection; I’m talking about true agentic AI that continuously analyzes system behavior and adapts in real-time to prevent service degradation.

Causely is positioned to lead this transformation, helping organizations shift from reactive troubleshooting to proactive, AI-driven service reliability. This isn’t just a step forward in observability—it’s a paradigm shift in how we think about IT Operations.

Conclusion

For 30+ years, we’ve been fighting the same battles in IT Operations, just at a larger scale. It’s time to rethink observability, move beyond endless data collection, and embrace a future where AI-driven automation ensures service reliability with minimal human intervention. Organizations that adopt this new paradigm won’t just reduce downtime and incident costs—they’ll free their engineers to focus on what truly matters: building the future.