On Monday, Delta had a no good, very bad, rotten day. Early in the morning, the company had a power outage in Atlanta that led to cascading failures throughout its computer systems, affecting the airline’s operations systemwide.

"Following the power loss, some critical systems and network equipment didn’t switch over to Delta’s backup systems," the company said in a statement. Delta’s investigation into the root cause is still ongoing.

The outage caused widespread delays and led to the cancellation of about 1,000 flights Monday, but Delta was still able to operate 3,340 of its nearly 6,000 scheduled flights, according to a company statement. Another 250 flights were cancelled early Tuesday.

"We were able to bring our systems back online and resume flights within a few hours yesterday but we are still operating in recovery mode," said Dave Holtz, senior vice president of Operations and Customer Center, in a statement.

Delta is not the first airline to face widespread computer system failures, and it likely won’t be the last. In 2015, Quartz began tracking the tech glitches plaguing airlines and preventing them from operating normally. Since then, it has tracked 24 significant airline system failures.

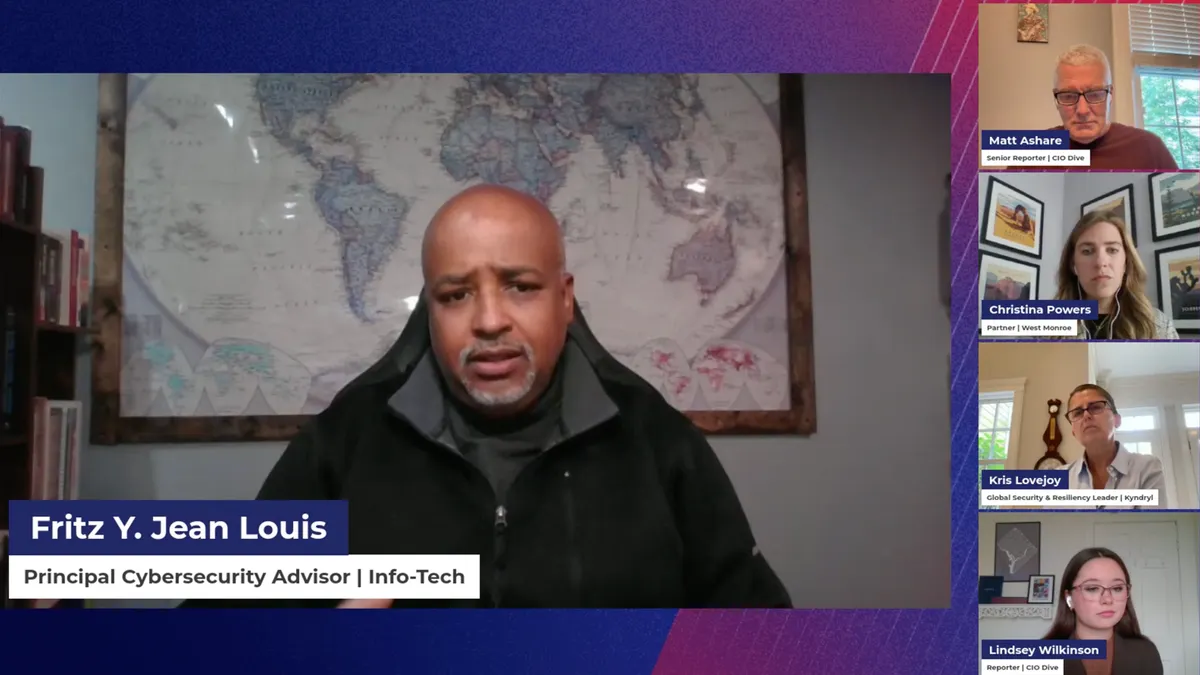

"Without knowing more about what really happened, you don't know whether it's a black swan event or whether this was a piece of carelessness or cost cutting or poor redundancy design," said John Parkinson, Affiliate Partner at Waterstone Management Group. But at the root of the problem,"there's a bit of system architecture issue going on here," he said.

It's an event that has led to a lot of downtime and angry customers. Against the backdrop of so many similar delays in recent months, it raises the question of how airlines prepare for such issues and how well they can deal with them. And for CIOs increasingly held responsible for a company's bottom line, there may be a few object lessons.

The outage affected Delta’s entire network because the company’s control points are centralized. Though running route scheduling, ticketing and check-ins over a single network is cost-effective, the system only runs smoothly while the central control points are available.

"As soon as one piece fails, you tend to get cascade failures," Parkinson said.

Companies could look toward regionally dispersed control centers, even though they're a bit more expensive, according to Parkinson.

"It's much harder to take the whole system down if there's that level of dispersion in its design," he said.

Redundancy, redundancy, redundancy

It is possible to avoid widespread system outages, but to do so, companies have to ensure redundancy measures are in place and fully operational.

"I know they had redundancy. Every big company has redundancy," said Zubin Irani, founder and CEO of cPrime. "My guess is the redundancy didn't work."

In data center design, there is usually dual redundancy put in place, with power coming from both the city and a generator that should turn on instantly in the event of a power failure. That generator basically serves as a "live battery pack" that keeps the data center running, according to Irani.

"Even if there was a power outage, you should not go down," Irani said. Because of a backup support grid, power should come back on almost instantaneously.

Since the systems did not seamlessly come back online, it is likely that something went wrong inside the data center, according to Irani. "On one hand you probably have either a design issue or you just have a failure in the backup system."

Even with the appropriate measures in place, companies cannot always guarantee they will work.

"If all of the network equipment that is required to connect your primary and your backup data center is impacted, it's possible that a failover didn't work the way it was designed to," Parkinson said.

Testing, testing, testing

Organizations often put redundancy measures in place to respond to system failures, but once implemented, those measures are frequently forgotten.

Companies "might test it when they put it in, but I guarantee you, I rarely see companies do annual testing on redundancy," Irani said. "Everyone will spend a lot of money building disaster recovery, building redundancy, but it is not a standard process for companies to do annual failover testing."

Organizations looking to keep systems online, even in the event of an emergency, would save a lot of time, energy and resources if they fully and regularly tested failover measures.

"These things could easily just not be working and you don't know about it until you have the failure," Irani said. "And no one tests it. So if you're not testing it, you kind of get hit by this."

"When I see these kinds of things happen, the biggest problem is people don't practice their recovery plans thoroughly enough," Parkinson said.

Rather than sitting down annually to plan responses for worst-case scenarios of system failures or other enterprise technology emergencies, companies often let disaster response fall by the wayside.

"If you're an airline, once a year may not be enough. Maybe you should do this once a quarter," Parkinson said. "You can imagine, that when something like this happens, panic is the first response. It's only training that stops the panic being a problem."