If your company is not embracing artificial intelligence, it is going to suffer. That's the message from all corners of the tech and business media world these days. CIO Dive is no exception. We've reported on the growing reliance on artificial intelligence everywhere from call centers to cloud computing to the airline industry.

Yet the technology is still a long way from imbuing machines with human-level intelligence. It's even further from the merging of machines and humans that fans of the technological singularity believe will unlock our true potential and, eventually, immortality.

Despite the remarkable victory that Google's AI-powered AlphaGo program scored against the world's top Go player, there is healthy debate about when machines will truly attain human-like intelligence. That would mean a machine that could do more than recognize patterns and learn from mistakes: it could also respond accurately to new information and understand unstructured data.

Plus, it is no easy task to transfer a given type of AI from one application to another. For example, The Nature Conservancy wants to fight illegal fishing by using facial recognition software, running on cameras mounted over the haul-in decks of fishing boats, to flag whenever an endangered or non-target species is brought aboard and not thrown back.

But it's not as simple as uploading a catalog of fish faces and pressing enter. Constantly changing light, variations in the orientation of the fish to the camera, and the movement of the boat all complicate matters. Kaggle, a data science competition platform, recently held a contest to incentivize coders to write software that addressed those variables.

Yet the more pressing question around AI is not whether it has truly arrived, but whether the AI features vendors are trying to sell your company actually, and consistently, work as advertised, and whether they will meet your objectives.

Understanding AI

The healthcare industry stands to benefit significantly from AI. Wearable devices are being developed to track changes or patterns in a patient's vital signs that could signal an approaching cardiac event. When one is detected, a physician can be alerted automatically. Of course, that's very different from relying on technology to make an actual clinical decision.

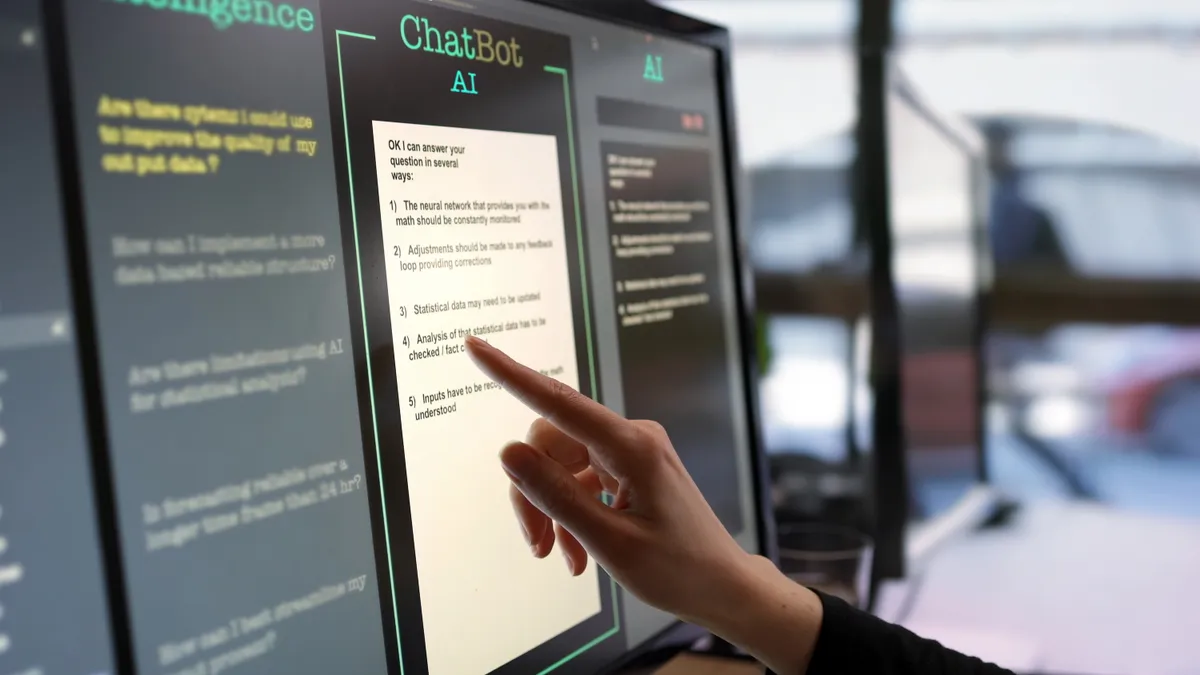

But a number of companies are starting to sell digital health assistants. This technology accesses a patient's medical records and analyzes input from the user to assess symptoms and provide a possible diagnosis. But why should we trust these platforms?

That's a question that Zoubin Ghahramani, professor of Information Engineering at the University of Cambridge, has spent a lot of time pondering.

We know that machine learning improves over time, as the software accrues more data and essentially learns from past events. So what if, as Ghahramani and his team posit in a University of Cambridge research brief, we designed artificial intelligence with training wheels of sorts? Vehicles with an autopilot mode, for instance, might ping the driver for help in unfamiliar territory if the car's cameras or sensors are not capturing adequate data for processing.

But unless you've actually written the algorithms that power that autopilot, or any other piece of AI technology, it is hard to tell how the system reached a decision, or how sound that decision is.

"We really view the whole mathematics of machine learning as sitting inside a framework of understanding uncertainty. Before you see data — whether you are a baby learning a language or a scientist analyzing some data — you start with a lot of uncertainty and then as you have more and more data you have more and more certainty," Ghahramani said.

"When machines make decisions, we want them to be clear on what stage they have reached in this process," he said. "And when they are unsure, we want them to tell us."
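Ghahramani's description of certainty growing as data accumulates, and of a machine that speaks up when unsure, can be illustrated with a toy example. The sketch below is a hypothetical illustration, not the Cambridge team's method: it assumes a simple Beta-Bernoulli model of a success rate, and the function names `beta_posterior` and `decide`, the uniform prior and the 0.05 threshold are all assumptions made for this example. The system acts only when its estimate is narrow enough and otherwise asks a human for help.

```python
import math


def beta_posterior(successes: int, failures: int,
                   prior_a: float = 1.0, prior_b: float = 1.0) -> tuple[float, float]:
    """Mean and standard deviation of a Beta posterior over a success rate.

    Hypothetical illustration: the uniform Beta(1, 1) prior stands in for
    "a lot of uncertainty" before any data is seen.
    """
    a = prior_a + successes
    b = prior_b + failures
    mean = a / (a + b)
    variance = (a * b) / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(variance)


def decide(successes: int, failures: int, max_std: float = 0.05) -> str:
    """Act only when the estimate is certain enough; otherwise defer to a human."""
    mean, std = beta_posterior(successes, failures)
    if std > max_std:
        return "unsure: ask a human"
    return "act" if mean >= 0.5 else "do not act"


if __name__ == "__main__":
    # More observations produce a narrower posterior, i.e. more certainty.
    for s, f in [(0, 0), (3, 1), (90, 10)]:
        mean, std = beta_posterior(s, f)
        print(f"{s + f:>3} observations: mean={mean:.3f} std={std:.3f} -> {decide(s, f)}")
```

With no data, or with only four observations, the sketch asks for help; after 100 mostly successful observations its uncertainty falls below the threshold and it acts. A real autopilot or diagnostic tool would need far richer models, but the principle of acting only when uncertainty is low enough carries over.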

Last year, with collaborators from the University of Oxford, Imperial College London and the University of California, Berkeley, Ghahramani helped launch the Leverhulme Centre for the Future of Intelligence.

One of the center's areas of study is trust and transparency around AI; other areas of focus include policy, security and the impacts that AI could have on personhood.

Adrian Weller, a senior researcher on Ghahramani's team and the trust and transparency research leader at the Leverhulme Centre for the Future of Intelligence, explained that AI systems based on machine learning arrive at decisions through processes that do not mimic the "rational decision-making pathways" humans comprehend. Using visualization and other approaches, the center is creating tools that can put AI decision processes in a human context.

The goal is to provide tools not just for cognitive scientists but also for policymakers, social scientists and even philosophers, because they, too, will play roles in integrating AI into society.

And by making AI functions more transparent, such tools could help commercial users of AI and their customers better understand how a system works, judge its trustworthiness and decide whether it is likely to meet the company's or its customers' needs.

A UL for AI?

The tech industry has begun collaborating around guiding principles to help ensure AI is deployed in an ethical, equitable, and secure manner. Representatives from Amazon, Apple, Facebook, IBM, Google and Microsoft have joined with academics as well as groups including the ACLU and the MacArthur Foundation to form the Partnership on AI.

It seeks to explore the influence of AI on society and is organized around themes that include safety, transparency, labor and social good.

But a system for rating AI features and ensuring compliance with basic quality metrics, similar to how Underwriters Laboratories (UL) certifies that appliances meet basic safety or performance standards, could also go a long way toward helping end users evaluate AI products.