Dive Brief:

- A new report from New York University's AI Now Institute says that the artificial intelligence (AI) industry has a "diversity crisis" across gender and race, one that could influence how AI systems are designed and implemented.

- According to the report, women are vastly underrepresented in the industry, making up just 20% of AI professors and 18% of authors at leading AI conferences. Likewise, companies have poor racial diversity; only 2.5% of Google's workforce is black, compared to 4% at Facebook and Microsoft.

- Noting that "bias in AI systems reflects historical patterns of discrimination," the AI Now Institute says that tech companies need to do more to diversify their ranks, including changing how they hire, achieving pay equity and committing to transparency around hiring.

Dive Insight:

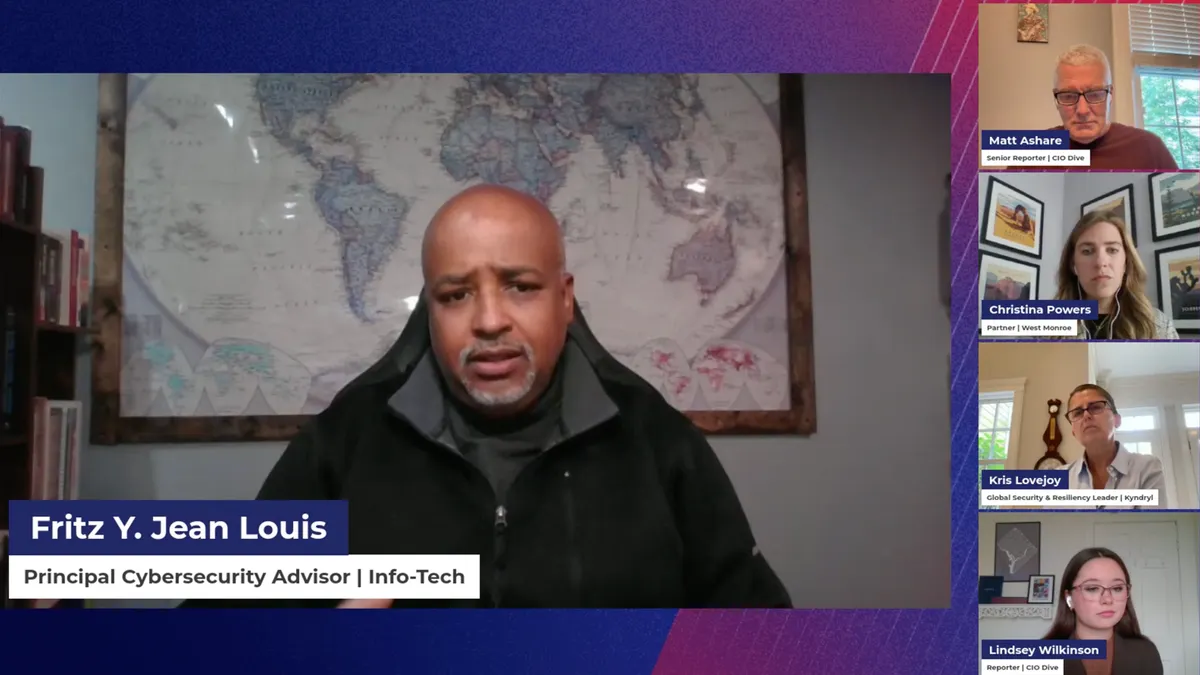

As governments and private companies have built AI systems into everything from criminal justice to mobility, there has been increasing attention to the biases that may be built into those systems.

The report notes that "AI systems function as systems of discrimination: they are classification technologies that differentiate, rank and categorize." Having a workforce and academic support system that is overwhelmingly white and male could bake in historical discrimination and biases from the start, the report warns.

This has been especially visible in facial recognition, where software has often struggled to distinguish, or even detect, people with darker skin. Similar problems extend to other computer vision systems: a March study of eight image-recognition systems built for autonomous vehicles found "uniformly poorer performance" for pedestrians with darker skin.

The authors also cast doubt on the industry's past efforts to correct its diversity problems. "Pipeline studies," which focus solely on moving diverse candidates from school into the industry, have not solved the problem, and they have not addressed deeper issues such as workplace culture, harassment and unfair compensation, which can drive diverse candidates out of the field altogether.

Instead, the report's authors say that increasing transparency around hiring and restructuring compensation to treat workers more fairly can help foster diversity. Likewise, they say more transparency is needed around how AI systems are developed and tested in order to avoid bias.

Governments have been trying to get ahead of potential AI bias, with mixed results. New York City passed a bill creating a task force to examine where automated decision systems could contain bias and to recommend how city agencies should address it.

The Center for Government Excellence at Johns Hopkins University released a toolkit to help governments spot and avoid bias in AI. This month, U.S. Sens. Cory Booker, D-NJ, and Ron Wyden, D-OR, introduced a bill requiring certain algorithms to be evaluated for bias. Still, the new report makes clear that fixing the problem will take more than government intervention; it will require change across the industry at large.