Dive Brief:

- Google now lets customers attach NVIDIA GPUs to its Preemptible VMs for half the price of comparable on-demand GPUs, according to a company blog post. Google introduced Preemptible VMs in May 2015 and in 2016 began offering Local SSDs for them at a discount.

- Preemptible GPUs will make it more affordable for customers to apply GPU compute to distributed batch workloads. The arrangement is "a particularly good fit for large-scale machine learning and other computational batch workloads," according to the blog.

- Preemptible VMs and their attached resources can run for at most 24 hours and can be shut down after a 30-second warning. For customers running "distributed, fault-tolerant workloads that don't continuously require any single instance," the lower price may be worth these tradeoffs.

Dive Insight:

Building or running AI systems isn't cheap for the average company. With expert salaries running well into six figures and beyond, AI budgets can be hard to apportion.

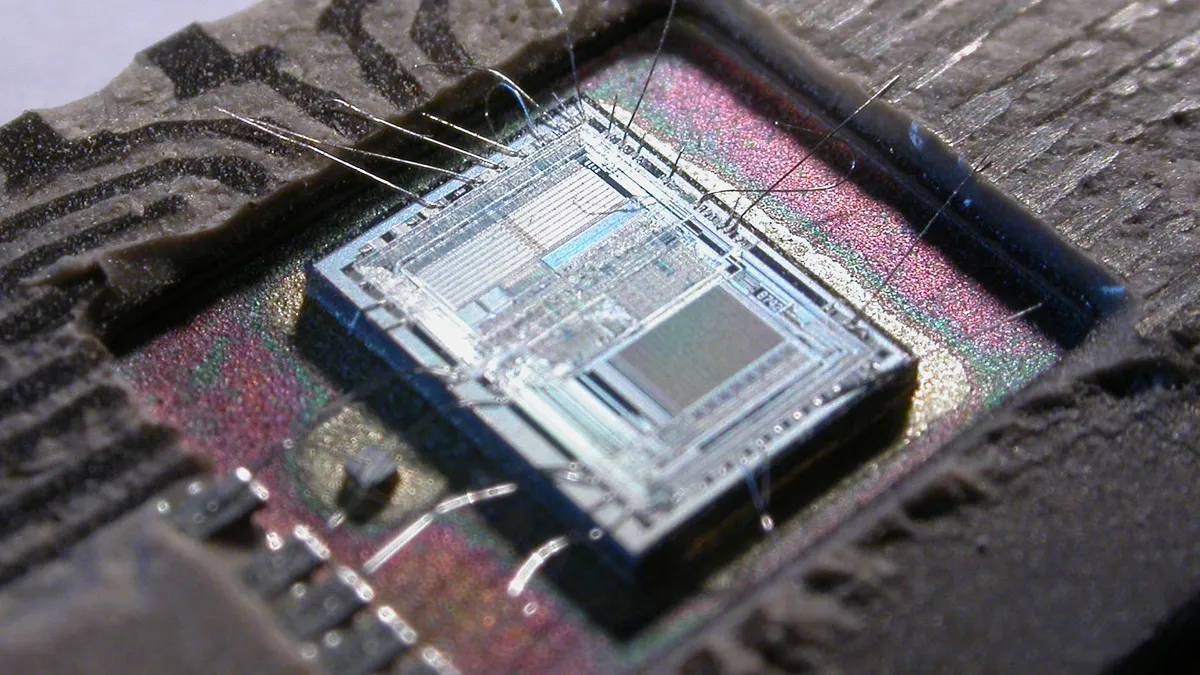

Companies often rent computing hardware from cloud providers rather than buying it, to save on costs. Cloud GPUs offer fast processing without the upfront and maintenance costs that dedicated in-house hardware quickly piles on. Access to affordable tools, including processing hardware, is an important part of democratizing AI and ML.

An estimated 40% of companies have AI pilots or experiments in place, while only around 20% have deployed AI at scale or in a core business function. With more affordable GPUs, more companies may find room in their budgets and strategies to get proofs of concept (POCs) and test cases off the ground.