Dive Brief:

- Nvidia deepened its partnerships with the four major U.S. hyperscalers this week, the company’s founder and CEO, Jensen Huang, said Monday during a nearly two-hour keynote at the GTC technology conference.

- AWS, Microsoft Azure, Google Cloud and Oracle plan to embed Nvidia’s new Blackwell graphics processors in their infrastructure this year. AWS, Azure and Google Cloud will also deploy Nvidia’s inference microservices, called NIM, Huang said.

- “The whole industry is gearing up for Blackwell,” Huang said. “Every CSP is geared up. All the OEMs and ODMs, regional clouds, sovereign AIs and telcos all over the world are signing up to launch with Blackwell.”

Dive Insight:

Nvidia remains a GPU chip design and manufacturing powerhouse at heart. Despite its recent advances in enterprise software and cloud services, AI processing power is the force boosting the company's profile and revenue gains.

“Nvidia has a lead in terms of perception and performance and they have a lock on the top fabrication processes and a development platform that puts competitors at a disadvantage,” Alvin Nguyen, Forrester senior analyst, told CIO Dive.

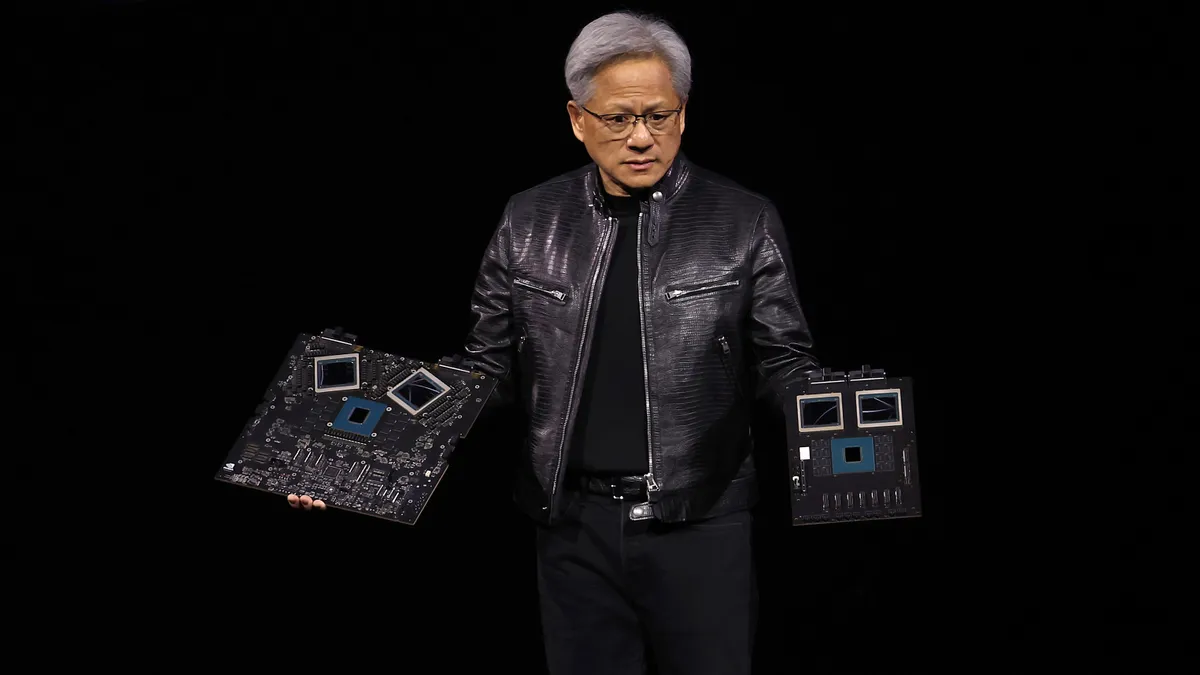

Huang unveiled the Blackwell B200 GPU on stage, along with the larger Grace Blackwell GB200, dubbed a ‘superchip’ in a Monday announcement. The processors succeed the Hopper H100 and H200 series, chips already in high demand for AI compute operations.

“Blackwell is not a chip,” Huang said, likening it more to a platform. “People think we make GPUs and we do, but GPUs don’t look the way they used to.”

The platform’s architecture can link up to 576 individual GPUs, the company said.

AWS will install the processors in EC2 instances to accelerate building and running inference on multitrillion-parameter LLMs. Microsoft plans to offer Nvidia GPUs and microservices across Azure, Microsoft Fabric and 365 services.

Google Cloud will adopt the Grace Blackwell AI computing platform and the NVIDIA DGX Cloud service. And Oracle plans to install the processors across OCI public and private cloud infrastructure.

Rapid hyperscaler buy-in is more than symbolic, Nguyen said. “This move is necessary for the hyperscalers since NVIDIA is essentially the face of AI infrastructure.”