Dive Brief:

- Nvidia rolled out its Llama Nemotron large language model family Monday at the CES trade show in Las Vegas. Built using Meta’s Llama foundation models, the LLMs are trained to power enterprise-grade agentic AI solutions, the company said.

- The GPU giant also released a PDF-to-podcast blueprint for document summarization apps and is working within its partner ecosystem to create industry-specific blueprints for AI agents that automate customer support, fraud detection, supply chain management and other business functions.

- In a separate announcement, Nvidia partner Accenture launched an AI Refinery for Industry platform built using Nvidia’s enterprise software to ease agentic AI adoption. Accenture plans to develop more than 100 use cases by the end of the year and will make 12 agentic solutions available in February.

Dive Insight:

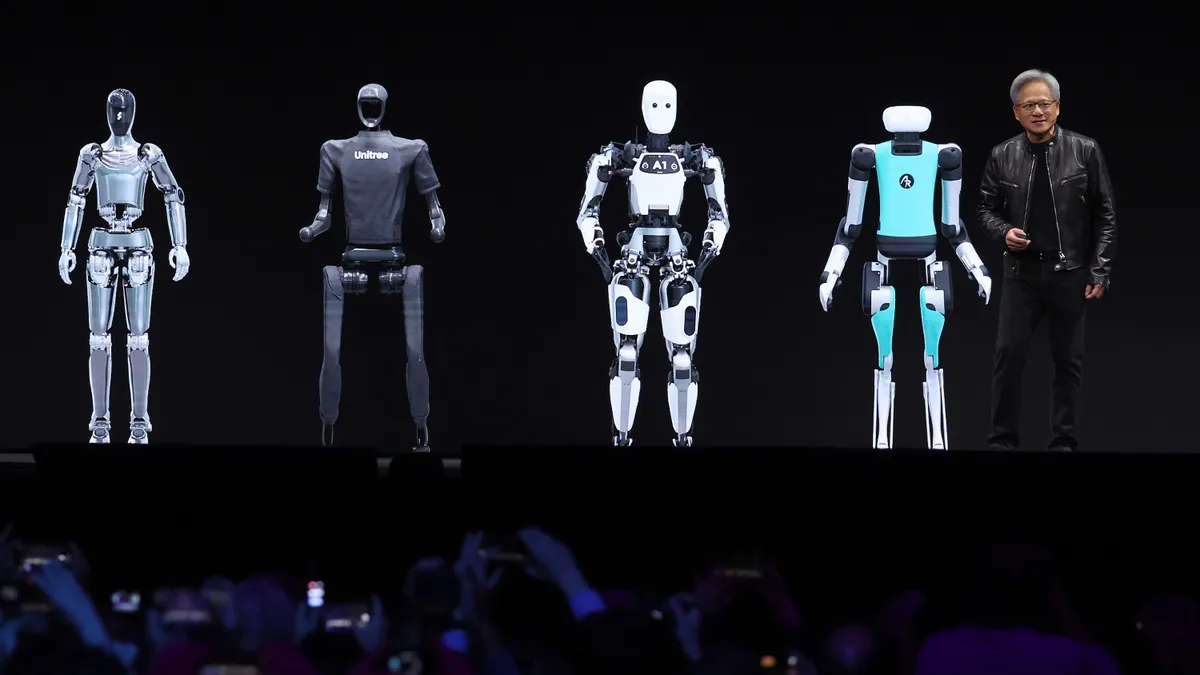

During the CES keynote Monday, Nvidia President and CEO Jensen Huang unveiled the company’s $3,000 Project Digits AI-PC, showed off the latest GeForce RTX graphics card and summoned a phalanx of robotic companions. But the futuristic hardware framed a more immediate message about software, automation and enterprise AI use cases.

“The next giant AI service, period, is software coding,” Huang said. “Everybody is going to have a software assistant helping them code.”

As enterprises seek returns on AI investments, LLMs have moved beyond coding to tackle a broad range of business tasks.

AI agent capabilities proliferated in the cloud in the final months of 2024. Microsoft readied Copilot for automated solutions in November, and AWS countered the following month with an AI assistant designed to ease Windows migrations.

Google Cloud deployed Agentspace, a customizable, AI-enhanced search and summarization tool, also in December, and Deloitte signed on as an early adopter. KPMG, another Big Four accounting and consulting firm, invested in AI start-up Ema in October and told CFO Dive it was incubating several agentic use cases, including an auditing assistant.

Nvidia’s agentic strategy is tied to partners across the tech landscape, Huang said during the keynote. SAP and ServiceNow are expected to be among the first providers to leverage the Llama Nemotron models.

“All of our Nvidia AI technologies are integrated into the IT industry,” Huang said.

Llama Nemotron models come in small, medium and large sizes for deployments on PCs and edge devices as well as in private and public cloud. The large model is designed for data center applications and can be used to evaluate and tune smaller models, Huang said.