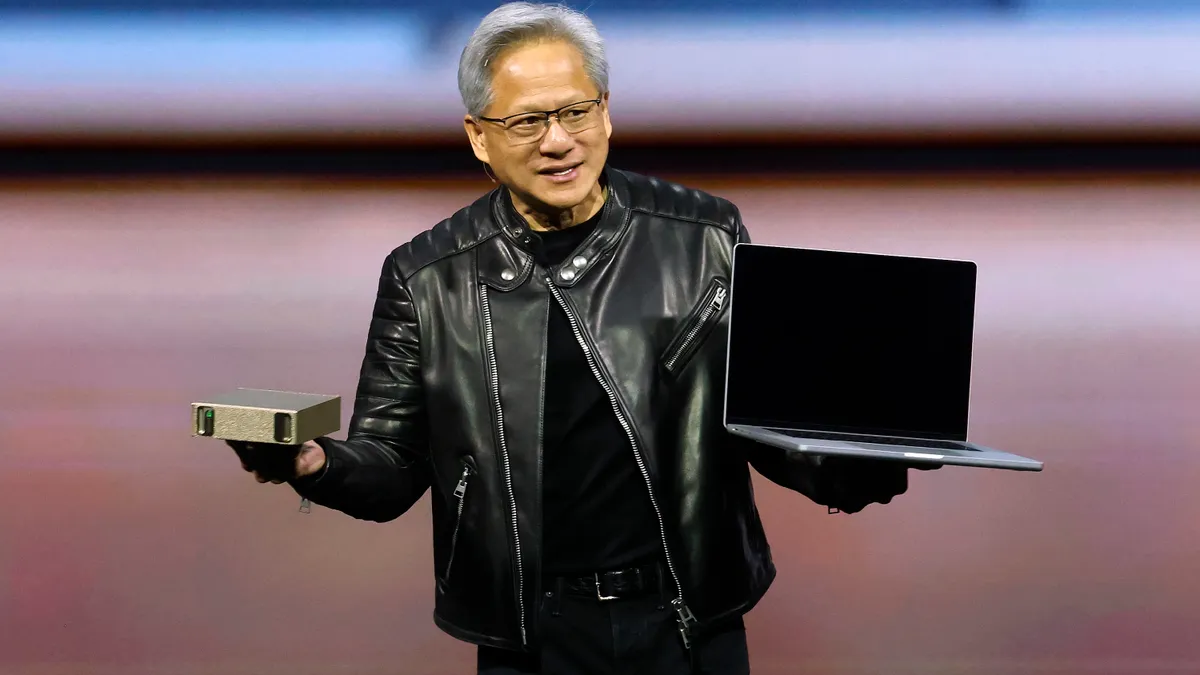

Nvidia CEO Jensen Huang enjoyed a victory lap Tuesday as the company convened its annual GTC conference. Of 25,000 in-person attendees, a select few were representatives of Nvidia's growing ecosystem of technology partners.

The GPU giant is leaning on allied providers to help spread AI usage and hardware consumption across industries.

“We have a whole lineup for enterprise now,” Huang said. “These will be available from all of our partners.”

In the race to train more capable large language models and deploy smarter generative AI technologies, Nvidia has been a clear winner. The company’s quarterly revenue has skyrocketed over the last two years. In its most recently completed quarter alone, revenue surpassed the $27 billion reported for its full 2023 fiscal year.

The rapid ascent “speaks to how pervasive generative AI is becoming,” Forrester Senior Analyst Alvin Nguyen said. “Everybody's wishing they could be in Nvidia’s shoes at this point.”

Several key technology providers tightened their orbit around Nvidia Tuesday.

Accenture rolled out an agent-building platform built on Nvidia technology and said it would leverage the chipmaker’s new family of Llama Nemotron reasoning models to develop up to 100 industry-specific agents this year. Nvidia partnered with Accenture, Deloitte, Microsoft, SAP and several other companies to deploy the models.

“Accenture has partnered with Nvidia since 2018 but our relationship has certainly accelerated over the past year due to the demand for generative AI in the enterprise and Nvidia’s growing enterprise software capabilities,” Tom Stuermer, senior managing director and lead of the Accenture Nvidia business group, said in an email.

Oracle also jumped on the agentic bandwagon. On Tuesday, the cloud and software provider deployed an Nvidia microservices software integration to help enterprises build cloud-based agentic applications. Oracle and Nvidia are collaborating on deployment blueprints to help enterprises provision cloud compute services for AI workloads, the companies said in a joint announcement.

IBM fired up Nvidia H200 GPUs in its cloud and said Tuesday it plans to integrate its watsonx AI platform with Nvidia microservices. IBM is one of several technology providers signed on to deploy a GPU-powered Nvidia data platform with AI query agents, according to a separate announcement.

“All these partnership announcements are carrying the message of what Nvidia does well out to enterprises,” Nguyen said. “They’re speaking in the language that enterprises want to hear in order to make that new technology palatable for enterprise.”

The agentic shift

As big tech turns to task-specific autonomous agents to help sell enterprises on generative AI’s business value, Nvidia is eying its next revenue stream.

“The amount of computation we need at this point as a result of agentic AI, as a result of reasoning, is easily 100 times more than we thought we needed this time last year,” Huang said Tuesday. “I expect data center buildouts to reach a trillion dollars, and I am fairly certain we're going to reach that very soon.”

Analysts agree. Dell’Oro anticipates AI consumption will drive data center capital expenditures beyond $1 trillion annually by 2029, as hyperscalers continue pouring massive amounts of capital into capacity buildouts.

In addition to supplying cloud providers with GPUs, Nvidia is pursuing a growing segment of AI revenues generated by enterprises that aren’t part of the company’s traditional customer base — including the finance and healthcare industries, retail and manufacturing.

“Nvidia's growing role in the enterprise IT space is a paradigm shift, signaling the importance of AI as an integral part of any IT strategy,” Scott Bickley, advisory fellow at Info-Tech Research Group, said in an email. “Nvidia has migrated from being a hardware provider to an AI enabler, driving enterprise transformation.”

Partners like Accenture and IBM are crucial enterprise connection points for Nvidia. They represent a pathway into the enterprise space for a company that just a few years ago was best known for its gaming chips.

“Enterprises and folks that serve enterprises, like IBM and Accenture, are very focused on what AI is going to do for the lives of their companies,” Jack Gold, founder and principal analyst at J.Gold Associates, told CIO Dive. “These partners are working with big IT shops, and they know that they are going to have to deploy AI capabilities, not just in the cloud, but also within their services and on-prem.”

Nvidia’s fortunes remain tethered to the big cloud providers and the AI compute resources they continue to stockpile. AWS, Microsoft and Google Cloud have already signaled their intentions to spend heavily on AI infrastructure through the end of the year. Oracle said earlier this month it plans to double its capital investments.

As enterprise AI strategies mature and agentic capabilities gain traction, Nvidia hardware will land in enterprise data centers and private clouds, too.

“We’re moving from an era of mostly AI in AWS, Azure and Google Cloud to an era where more and more companies are going to bring AI servers in-house,” Gold said. “It might not say Nvidia on the box, but you’re going to have increasing amounts of Nvidia hardware installed mostly as part of somebody else's systems.”