As cloud revenue growth regained momentum this year, the $10 billion Microsoft forked over to OpenAI for generative AI in January 2023 has started to seem like small potatoes.

Since firing the first salvo in the battle to capture enterprise AI ambitions, the tech giant has opened its war chest to expand its Azure infrastructure empire, committing tens of billions of dollars to amass the resources the technology craves: cloud storage and compute.

“The pressure is on,” Alistair Speirs, Microsoft’s director of Azure global infrastructure, told CIO Dive. “This is no longer the experimental phase of the cloud.”

Speirs directs data center strategy with a focus on what he called “the easy problems” — reliability, sustainability, resilience, security and data sovereignty.

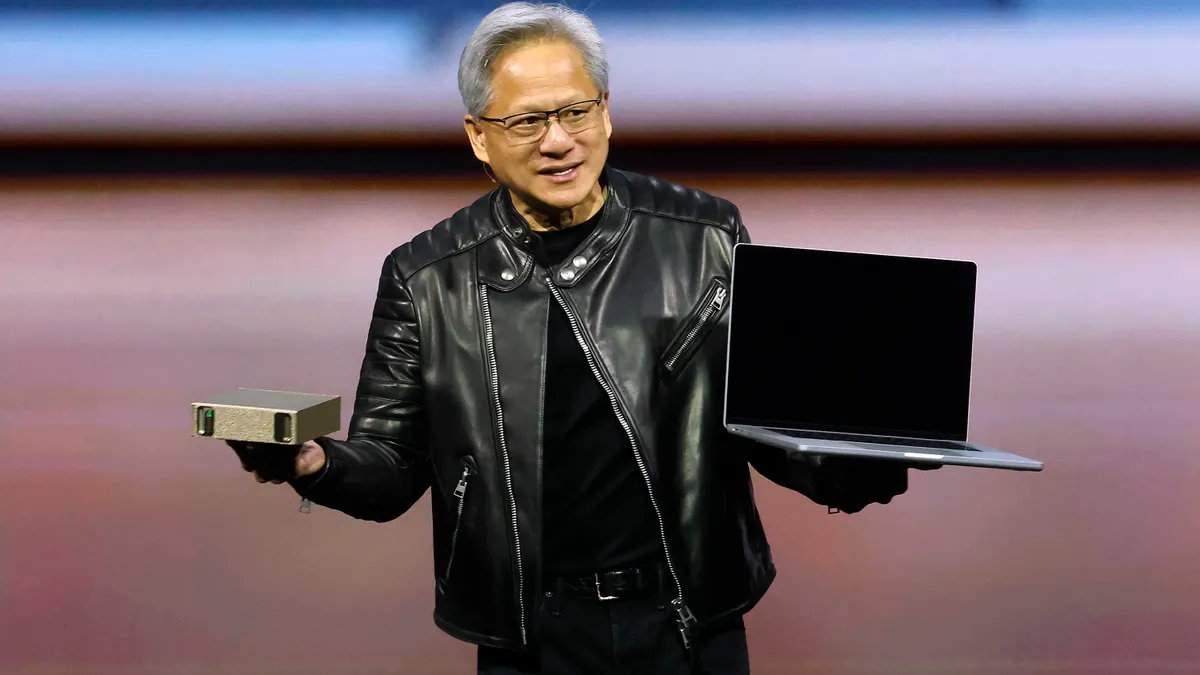

Building, embedding and supporting enterprise-grade large language model technologies — the coding assistants, chatbots and summarization tools already in production — adds new challenges. To prepare for AI compute, Microsoft is building out capacity while installing servers outfitted with graphics processing units and other high-powered chips.

“AI is another workload that's coming into the data center,” Speirs said. “It has some unique requirements that change the types of servers you need and the power requirements as well but is essentially part of that continuum. It is giving us another data point to accelerate.”

The acceleration has gained momentum in recent months.

Microsoft’s $3.3 billion commitment to build cloud infrastructure and AI-related initiatives in Wisconsin, announced at a ceremony attended by President Joe Biden in May, is part of a broader, global strategy underway.

In just the last six weeks, Microsoft opened its first data center in Mexico, announced a $1 billion data center package for Kenya, invested 4 billion euros ($4.3 billion) in France and expanded its Azure footprint in Indonesia, Thailand and Malaysia.

“We have been doing what is essentially capital allocation to be a leader in AI for multiple years now,” CEO Satya Nadella said during the company’s fiscal Q3 2024 earnings call in April.

The AI leadership push is reflected in Microsoft’s spending patterns. The company reported $14 billion in capital expenditures for the three-month period ending March 31, including infrastructure and leases to support cloud demand. That’s nearly double the $7.8 billion in capital expenditures the company reported during the same period last year.

CFO Amy Hood said the spending went toward satisfying demand for cloud and was likely to increase as the company deepens AI infrastructure investments.

“We continue to bring capacity online as we scale our AI investments with growing demand,” Hood said, noting near-term demand surpassed available capacity.

Building compute

Data center facilities house the engines that drive cloud business. Optimizing servers for AI workloads is just the latest infrastructure challenge.

Microsoft learned a lot about ramping up capacity during the pandemic years, Speirs said. But data centers are, by definition, strategic investments. They can take years to build and require small armies to run.

“These are complex infrastructure projects,” Speirs said. “They require a lot of people and it’s not just the servers that go into them, but also the construction effort, the planning, the sustainable siting of the land, the permitting and renovating — all of that has to happen.”

The hyperscaler business model was built on supplying enterprise compute resources as efficiently as a public utility. Scalable, service-based infrastructure allowed customers to fuel digital transformation without adding costly and complex on-prem estates.

“These are the new railroads for the 21st century,” Gordon Dolven, director of Americas data center research at CBRE Group, said. “If you want to deliver a service, whether it's streaming or content or messaging on an application, it requires data centers.”

Infrastructure expansion was already in the cards, as hyperscalers built capacity in order to keep pace with cloud adoption across industries. Despite last year’s macroeconomic headwinds, enterprise cloud consumption drove an 18% year-over-year increase in global cloud spending, according to Gartner. This year, the analyst firm expects 20% growth, with LLM technologies helping to drive global cloud spend close to $700 billion.

As consumption of cloud resources and AI compute grows, efficiency remains a crucial feature.

“We have a nice strategic altruism alignment here of making sure that any electron used in our data center is billable,” Speirs said. “That is driving a lot of investment in how we get more efficient, more sustainable and reduce one of our largest operational expenses.”

Azure’s AI boost

The race to deploy generative AI capabilities has already augmented Microsoft’s cloud business.

Azure revenue jumped 31% year over year during the first three months of 2024. AI services provided a big assist, Hood said during the earnings call, adding seven percentage points of revenue growth to Azure and other cloud services.

The revenue bump gave Microsoft sufficient momentum to capture one-quarter of the global cloud market for the first time. The company’s two main rivals — AWS and Google Cloud — also saw revenue growth increase as they held onto 31% and 11% of the cloud market, respectively.

Microsoft remains the second-largest hyperscaler behind AWS

Microsoft’s gradual ascent has mostly been at the expense of smaller cloud providers, not AWS or Google Cloud, John Dinsdale, Synergy Research Group chief analyst and research director, said.

An infrastructure arms race

Microsoft isn’t operating in a vacuum. AWS and Google Cloud are also doubling down on infrastructure buildouts to capture AI business and market share.

AWS has more or less matched Microsoft’s cadence with data center buildouts. Amazon’s cloud division will spend $11 billion on cloud data centers in Indiana, the company announced in April, on top of the $10 billion the company committed to multiple complexes in Mississippi at the start of the year and $7.8 billion pledged to expand infrastructure in Ohio last summer.

Amazon’s capital expenditures for AWS were $14 billion during the first three months of the year. That number is expected to increase over the next three quarters, Amazon CFO Brian Olsavsky said during Amazon’s April earnings call.

Google Cloud’s publicly announced infrastructure expansions have been more modest in scope. The company pledged $3 billion in data center investments in April — $1 billion for Virginia and $2 billion for Indiana — and committed $1 billion to building a Missouri data center in March. But its Q1 capital expenditure bill nevertheless amounted to $12 billion.

The building frenzy has already eaten up capacity in the private market, according to CBRE’s analysis of North American data center trends during the second half of 2023.

While supply grew 26% year over year in the data center rental and colocation market, the vacancy rate was at a near-record low of 3.7%. Of the new capacity still ramping up, an estimated 83% is already spoken for, CBRE said.

Big cloud providers, along with companies in the technology, financial services and healthcare sectors, are consuming the lion’s share of available capacity, Dolven said.

“Historically, these companies may have thought about pre-leasing six, nine or 12 months in advance,” Dolven said. “Now we’re seeing more and more interest in getting ahead of the curve.”

The enterprise bill comes due

The hyperscaler spending spree offers a glimpse into cloud’s AI-driven business model: build capacity, embed generative AI capabilities and cover the cost on the back end as enterprise consumption of infrastructure and services grows.

“Net new revenue is going to offset the cost of doing business,” Gartner VP Analyst Sid Nag said. “That premium that you're going to pay — that the CIO is going to pay — will essentially absorb the cost of these multibillion-dollar data centers.”

Just as LLM builders depend on cloud for compute resources, hyperscalers are counting on generative AI applications to consume and pay for the infrastructure the technology is reshaping.

“Compute and storage are the most expensive parts when it comes to cloud costs,” Tracy Woo, Forrester principal analyst, said. “Now customers are asking for supercharged compute, which can be really expensive.”

The inherent advantage of running generative AI in cloud is that enterprises don’t have to foot the bill for up-front infrastructure investments to work with the technology, Woo said. The hyperscalers are providing customers with the optimized infrastructure.

“That's pretty much been the value prop of cloud for the last seven to eight years,” Woo said.

Over time, the thinking goes, internal process efficiency gains, faster time to market for new products and services, and overall business growth will offset the downstream cost to the enterprise.

Generative AI’s per-use cost is also likely to come down as hyperscalers optimize data center operations, chip technologies become more energy efficient and enterprise-grade models get smaller and more contained.

As global cloud infrastructure capacity doubles over the next four years and more than 100 new facilities come online annually, smaller data centers are becoming more common, Dinsdale said, citing Synergy Research Group market analysis.

The dispersal trend can help mitigate environmental costs, an additional boon to enterprise customers as emissions regulations tighten.

“Rather than just expand existing data centers, we're adding new regions,” Speirs said. That way, Azure can locate data centers closer to customers to satisfy data sovereignty and latency reduction requirements, and the company can situate new facilities near renewable energy sources.

As AI systems become more efficient, the buildout strategy may need adjustment, David Linthicum, enterprise technology analyst at SiliconANGLE and theCUBE, cautioned.

“The hyperscalers may be overbuilding in anticipation of capacity that many AI systems, if architected properly, may not need,” Linthicum said. “Enterprises are going to get much better at optimizing any use of AI, for much less infrastructure than they thought they initially needed.”

Speirs isn’t worried.

“As we look forward, expect AI in every app and in every service,” Speirs said. “There will be a time relatively quickly when it will be kind of silly to have an AI strategy because it won't necessarily be something distinct or different.”