Dive Brief:

-

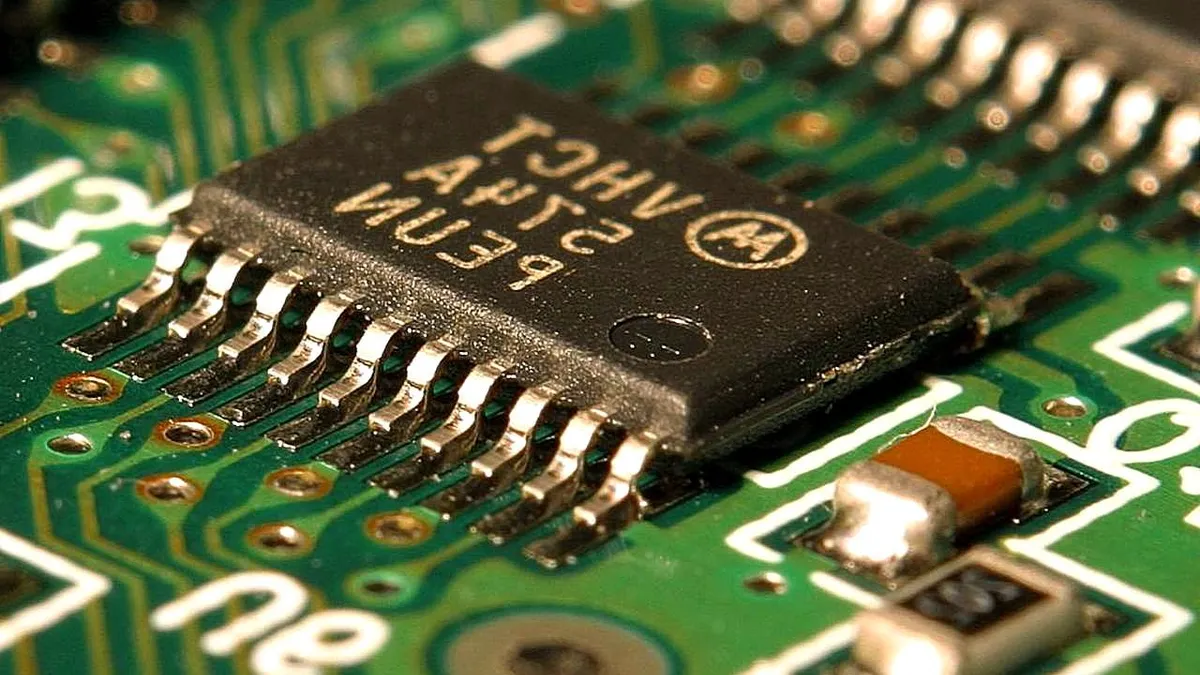

Google CEO Sundar Pichai introduced the company's new Cloud TPU chips Wednesday during Google I/O in California. The chips are specially designed for machine-learning research, according to Geekwire.

- "One of our new large-scale translation models used to take a full day to train on 32 of the best commercially-available GPUs — now it trains to the same accuracy in an afternoon using just one eighth of a TPU pod," wrote Jeff Dean and Urs Hölzle in a Google blog post about the chips.

- Collections of the TPUs, which Google calls "TPU pods," can deliver 11.5 petaflops of processing power, Geekwire reports. The TPUs are available for Google Cloud Platform customers now.

Dive Insight:

Companies like Google, IBM and Intel know AI and machine learning hold huge potential for future profits. But cognitive computing applications also require enormous amounts of processing power, and companies know they can't get one without the other.

At the moment, there's a race to see who will deliver that computing power first. Just this week, Hewlett Packard Enterprise (HPE) introduced a new computer prototype that contains 160 terabytes of memory.

For Google, producing computer chips is new territory, but one it appears comfortable in. And it makes sense for Google, which employs some of the most talented engineers in the world, to design its own chips rather than pay a competitor, or potential competitor, for them. And unlike other machine learning-related chips announced recently, Google's TPUs are already available to customers through Google Cloud.