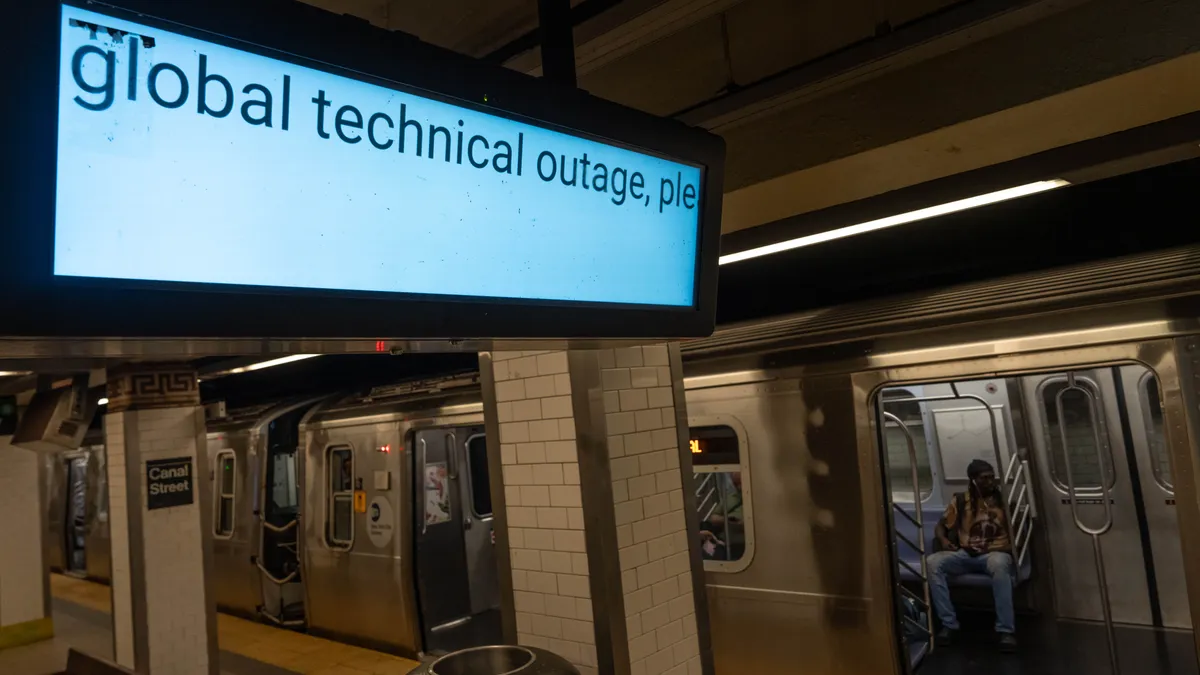

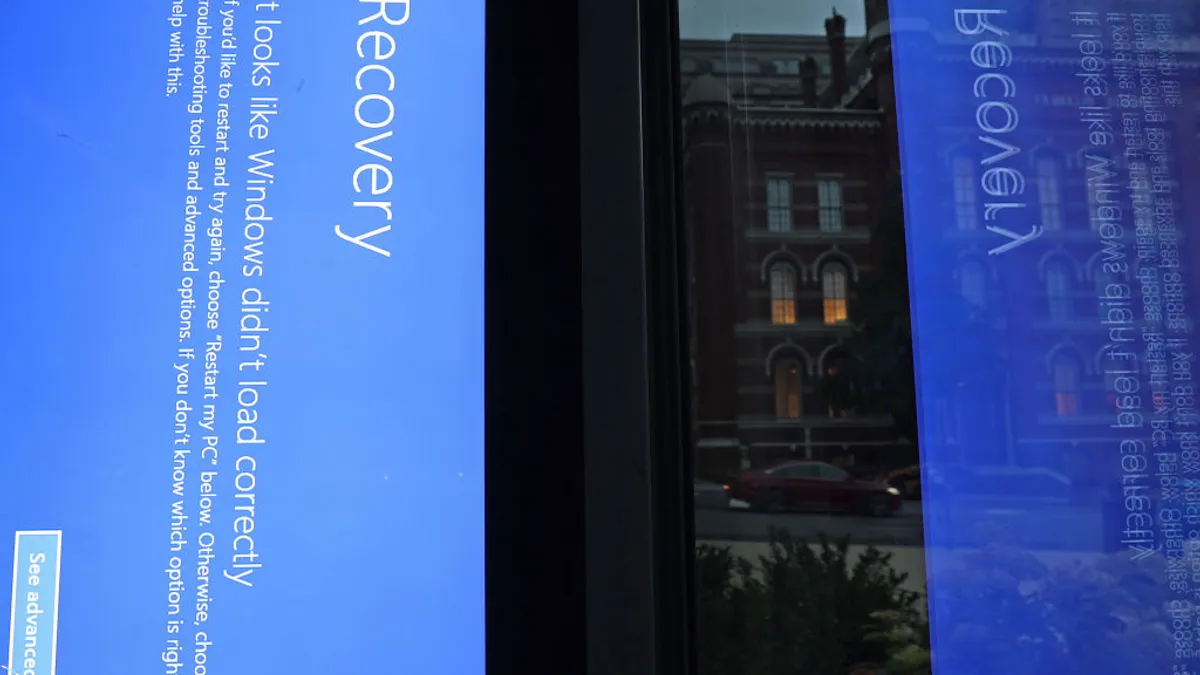

Businesses around the world screeched to a halt on July 19 after millions of Windows computers crashed, flashing the dreaded blue screen of death. The culprit: a software update in security vendor CrowdStrike's platform, which led to hours of disruption for some — and days for others.

The IT outage grounded airlines across the country and crashed banking apps, showing the tangible effects a bad piece of code can have in any IT-dependent operation.

In the aftermath of the CrowdStrike outage, analysts stressed to CIOs and other tech leaders the need for closer scrutiny of automatic software updates. Part of the problem is a steady push toward broader IT automation and the industry's disproportionate reliance on centralized vendor updates.

"There's been an almost crazed drive towards automation of SaaS over the last five years," said Phil Fersht, CEO and chief analyst at HFS Research.

"This is a big, big wake-up call to the whole IT industry's overreliance on a blind trust that everything's just going to be upgraded," said Fersht. "Fairly small code issues can cause massive ramifications as we've just seen."

The faulty update that triggered CrowdStrike's global outage was live for little more than one hour, but automated updates amplified its reach. Fortune 500 companies face financial losses from the outage exceeding $5.4 billion, according to one estimate.

"I think it's just been a little bit of complacency," Fersht said. "Too much trust in big tech that, as long as we buy Microsoft, for example, everything's going to be fine."

Automation frustration

The CrowdStrike outage showed the consequences of a critical software failure landing in widely adopted solutions by way of automatic updates.

Automating IT updates slowly grew in popularity, driven by the availability of package-manager utilities in Unix and later Linux, said Charles Betz, research director at Forrester. Centralized patch management soon emerged for laptop fleet management, and then Microsoft's move to cloud-based solutions like Microsoft 365 provided a watershed moment.

"I think the convenience has been seductive," Betz said.

Without proper quality assurance mechanisms, blind trust in automated vendor updates can lead to widespread issues in critical systems.

"Automation replicates a result quickly and consistently without regard for the virtue of that result," said John Annand, research director at Info-Tech Research, in an email. "A bad change propagates just as fast as a good change."

IT downtime, no matter the root cause, can impact operations and fuel customer frustration. It also comes with a high sticker price: downtime costs U.S. businesses more than $400 billion per year, according to Splunk data.

It's up to technology leaders to stave off the effects of future faulty software updates by putting safeguards in place, according to Annand. As IT organizations look to AI and automation, the outage highlighted the need for internal checks and balances, he said.

Preventing the next big one

Analysts have flagged the importance of risk mitigation techniques such as canary deployment — preliminary rollouts under controlled conditions prior to broader deployments.

Following the outage, CrowdStrike announced it is taking steps to restore customer confidence, such as adding more validation testing and releasing new updates through a staggered deployment strategy.
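
For readers who want to see the idea in concrete terms, the canary and staggered rollouts described above can be sketched in a few lines of Python. This is a minimal illustration, not CrowdStrike's process or any vendor's actual tooling: the function `staged_rollout`, the `apply_update` callback, the stage fractions and the failure threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence


@dataclass
class RolloutResult:
    completed: bool                 # True if every stage passed its health check
    updated_hosts: List[str]        # hosts that received the update before any halt
    halted_at_stage: Optional[int]  # index of the failing stage, or None


def staged_rollout(
    hosts: Sequence[str],
    stage_fractions: Sequence[float],
    apply_update: Callable[[str], bool],
    max_failure_rate: float = 0.0,
) -> RolloutResult:
    """Push an update in widening stages and halt if a stage looks unhealthy.

    stage_fractions are cumulative shares of the fleet, e.g. (0.01, 0.1, 1.0).
    apply_update (hypothetical) returns True if the host is healthy afterward.
    """
    updated: List[str] = []
    start = 0
    for stage_index, fraction in enumerate(stage_fractions):
        if start >= len(hosts):
            break  # the whole fleet is already updated
        # Cumulative host count for this stage, with at least one new host.
        end = min(len(hosts), max(start + 1, round(len(hosts) * fraction)))
        batch = hosts[start:end]
        failures = 0
        for host in batch:
            healthy = apply_update(host)
            updated.append(host)
            if not healthy:
                failures += 1
        # Stop before the next, larger stage if too many hosts failed.
        if failures / len(batch) > max_failure_rate:
            return RolloutResult(False, updated, stage_index)
        start = end
    return RolloutResult(True, updated, None)


# Simulated fleet of 100 machines; no real systems are touched.
fleet = [f"host-{i}" for i in range(100)]
stages = (0.01, 0.10, 1.0)  # canary first, then 10%, then everyone

bad = staged_rollout(fleet, stages, apply_update=lambda host: False)
good = staged_rollout(fleet, stages, apply_update=lambda host: True)
print(len(bad.updated_hosts), bad.halted_at_stage)
print(len(good.updated_hosts), good.completed)
```

In this simulation, an update that breaks every machine stops at the canary stage instead of reaching the full fleet, while a healthy update proceeds through all three stages. A real rollout would also need soak time between stages and a rollback path, which the sketch leaves out.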

"Quality assurance and regression testing are critical," said Jen Kling, Microsoft global partnership director at TEKsystems. "You cannot just blindly trust whatever updates are being pushed out."

Executives must consider whether critical systems and applications should accept updates as soon as they are released or whether it is prudent to delay them until their operational safety can be confirmed.

"When you do your business continuity and your disaster recovery planning, you have to think about how quickly you accept those" updates, said Kling. "There were a lot of companies that immediately accepted what was pushed out."

In the post-CrowdStrike world, a shift in perspective is already underway.

"There's going to be, and already is, a greater level of stringency around testing upgrades," Fersht said. "You can do digital twin models, more and better use of synthetic data, better testing of these things before they occur."

Matt Ashare contributed to this story.