Few advances in banking offer the promise — or pitfalls — that artificial intelligence does.

AI has the power to deliver new efficiencies, insights and safeguards for financial institutions. Many banks have turned to AI-based phishing detection apps to fight cybercrime, which has cost the global economy up to $400 billion, according to Bonnie Buchanan, a finance professor at the University of Surrey in the United Kingdom.

Robo-advisers are quickening the pace of transactions and replacing human staff in wealth management. And voice and language processing are reducing service times and customer frustrations in the filing of simple insurance claims, said Jesse McWaters, an analyst with the World Economic Forum.

Algorithmic lending is also helping companies like Los Angeles-based ZestFinance raise loan production without raising delinquency or default rates.

"Customers using our machine-learning underwriting tools to predict creditworthiness have seen a 10% approval rate increase for credit card applications, a 15% increase for auto loans and a 51% increase in approval rates for personal loans — each with no increase for defaults," ZestFinance CEO Douglas Merrill told members of the House Financial Services Committee in late June. Buchanan and McWaters also testified at the hearing.

While machine learning is helping increase credit access for low-income and minority borrowers, the data points, or combinations thereof, that AI uses in its models can also show an unconscious bias along racial lines, witnesses said.

"African-Americans may find themselves the subject of higher-interest credit cards simply because a computer has inferred their race," Nicol Turner Lee, a fellow with the Brookings Institution, told lawmakers. "If we do not [create fairer models], we have the potential to replicate and amplify stereotypes historically prescribed to people of color and other vulnerable populations."

Too many data points

The problem is multilevel, witnesses said.

First, machine-learning underwriting models may draw on too many data points to evaluate potential borrowers. Those data points may help financial institutions tailor products to certain individuals.

But the models can also make critical and potentially lasting inferences based on a user's social media accounts, online purchases and web browser histories, Turner Lee said.

"Consumers give so much data that there are no start and stop points," she said. "The inferences that come out of that data [are] what's troubling. That leads to those unintended consequences."

At times, the use of alternative credit data in lending profiles has produced positive results. Online lender Upstart last week reported a 27% increase in loan approvals in the past two years and a decrease in the accompanying interest rate when using such data in its model.

Rep. Bill Foster, D-IL, pointed to a working paper published by the University of California, Berkeley, that found algorithmic lending models discriminated 40% less than face-to-face lenders for mortgage refinancing loans.

But as data points vary, so can results.

Another lawmaker at the hearing, Rep. Sean Casten, D-IL, said he built an algorithm in the private sector to try to predict energy consumption.

It worked "beautifully," he said. "But the more granular I got, the more inaccurate it was."

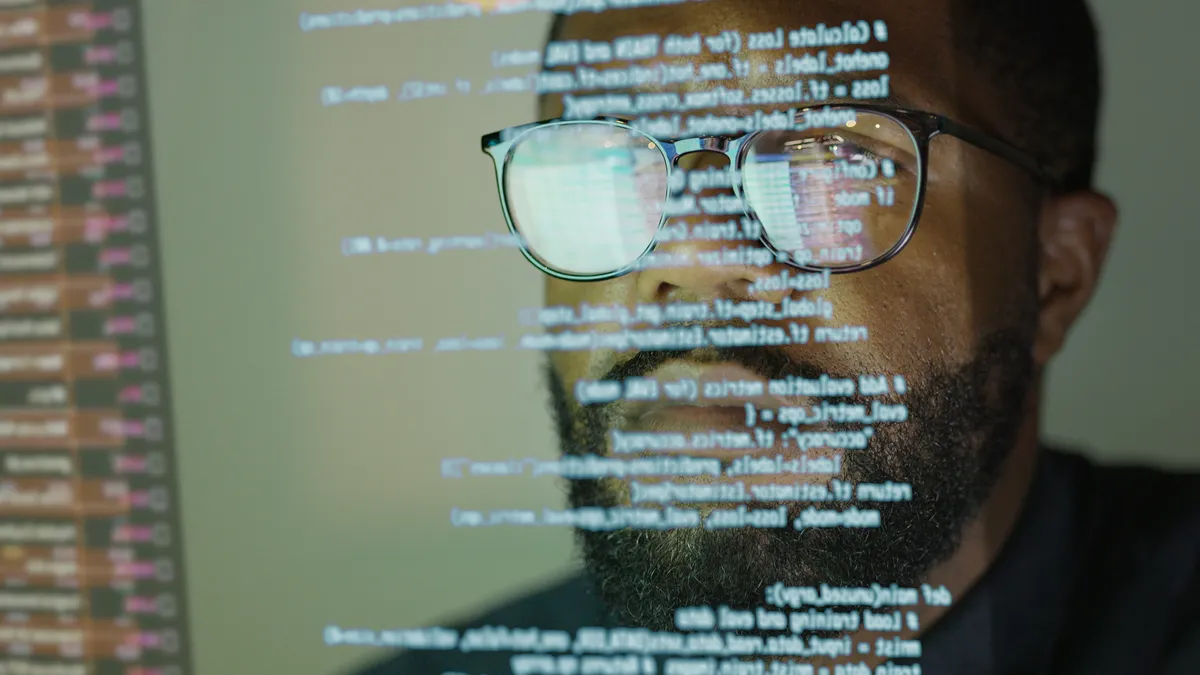

The 'black box' conundrum

To "fix" an algorithm (to see how an outcome changes), pairs of data points, or signals, need to be isolated, Merrill said. And the number of combinations can be astronomically large.

"If you have 100 signals in a model, which by the way would be a very small model, you'd have to compare all hundred to all other hundred, which sounds easy, except that turns out to be more computations than there are atoms in the universe — which is a bad outcome if you want an answer," he said.

That results in a "black box" model, where those who operate, or even create, the system can't adequately explain or verify what it does.
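
Merrill's 100-signal arithmetic can be made concrete in a few lines. The Python sketch below counts three things for a model of that size: unordered pairs of signals, all possible subsets of signals, and all possible orderings of signals. Merrill did not say which count he had in mind, and the figure of roughly 10^80 atoms in the observable universe is a commonly cited estimate rather than a number from the hearing; both are assumptions of this illustration, as is the helper name `interaction_counts`.

```python
import math

N_SIGNALS = 100            # the model size Merrill used in his example
ATOMS_ESTIMATE = 10 ** 80  # assumption: commonly cited order-of-magnitude estimate


def interaction_counts(n):
    """Return (pairs, subsets, orderings) for a model with n signals."""
    pairs = math.comb(n, 2)        # unordered pairs of signals
    subsets = 2 ** n               # every subset of signals, including the empty one
    orderings = math.factorial(n)  # every possible ordering of the signals
    return pairs, subsets, orderings


pairs, subsets, orderings = interaction_counts(N_SIGNALS)
print(f"pairs:     {pairs:,}")
print(f"subsets:   {subsets:.3e}")
print(f"orderings: {orderings:.3e}")
print("subsets exceed atom estimate:  ", subsets > ATOMS_ESTIMATE)
print("orderings exceed atom estimate:", orderings > ATOMS_ESTIMATE)
```

Under these assumptions, comparing signals strictly two at a time is a small job (4,950 pairs). The count outruns the atom estimate only once every ordering of the signals is considered, which suggests the difficulty Merrill described lies in testing signals in combination rather than in simple pairs.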

"Lenders know only that an ML model made a decision, not why it made that decision," Merrill said.

Bias may not be the machine's fault, but it would help if the people creating AI were more diverse, Turner Lee said.

"It's not the algorithm that's saying to themselves, 'I'm going to be biased today,'" she said. "It's who we are as a society and who is actually inputting that data to create what has to be considered the garbage-out variables."

Merrill put it another way.

"There's bias [in non-AI models] because white men have traditionally dominated credit roles, so the backdata is a bad representation of the world," he said. Today, "most ML models are produced by the proverbial white guy in a hoodie."

Who's liable?

Questions remain as to who is legally liable in cases of bias: the financial service provider or the software developer.

"What does disparate impact mean when collective groups of people are denied loans, credit, or some form of equitable opportunity simply because a computer was wrong?" Turner Lee asked.

Regulators, too, have been slow to offer guidance on banks' use of artificial intelligence.

Federal Deposit Insurance Corp. Chair Jelena McWilliams said this month that if agencies can't agree on a path forward, "I'm willing to take the risk of the FDIC being the outlier in this space."

Turner Lee suggested regulators offer companies incentives for following diversity best practices, adding that companies should check that they comply with nondiscrimination practices before an algorithm's release "rather than clean up the mess at the end."

Clarity is the most important thing regulators and policymakers can bring, Merrill said. "Even clarity that is not perfect is better than uncertainty to get companies to innovate in a good way."