At the stroke of midnight, the day of giving thanks quickly turns to a long weekend of consumerism, from Black Friday and Small Business Saturday to Cyber Monday. Retailers and e-commerce players are pushing the boundaries of those periods in both directions, turning the spending days into a spending craze.

For many shoppers, gone are the days of waiting in cold lines all night and packing into stores with hundreds of other shoppers. With a simple search and click, all the benefits of the year's biggest shopping weekend are available on laptops and mobile devices.

Yet as one nuisance disappears, another emerges: Site crashes and server outages, the chilling plagues of e-commerce.

On the front lines of this battle are developers and engineers; up for grabs are the attention, loyalty and income of millions of customers. An hours-long outage could cost a business thousands, hundreds of thousands, even millions of dollars.

To manage the increasingly complex sites and partner ecosystems supporting e-commerce platforms, IT teams have to handle development, monitoring, logging, scaling, flagging and more. Preparing for such a high-traffic event also means preparing for failure.

As businesses enable autonomous processes, more crashes and outages are prevented. But no platform, no matter how big, is ever perfectly safe from the service and traffic demands of holiday consumers.

Running through the technology ecosystem maze

Remember when a page stalls or the site goes down that on the other end of those frantic pushes of the refresh button sits a developer — stressed, busy and trying their best to get that shopping cart of holiday presents back to the customer.

There are five common problems that cause holiday e-commerce outages, according to Catchpoint: Overburdened APIs, sluggish third-party components, sites loaded with graphic components, servers ill-equipped for peak traffic and a failure to look at performance at the regional level.

Typically the DevOps team babysits deployments, and if something goes wrong they have to jump in and resolve — a process that could take hours or even days, according to Steve Burton, VP of SMarketing at Harness.

The web of technologies and vendors the team has to juggle can be extensive. One option to help mitigate problems in the development pipeline is a layer of management software that sits across processes to help DevOps teams gain more control and transparency over what is happening in their system.

Burton breaks the DevOps process into seven steps: Build, test, deploy, run, monitor, manage and notify. Each process has a variety of vendors that can be tied to it, creating more system complexity to manage.

After building and testing a system, a business will deploy and run it. Cautious teams might also choose to use a canary deployment, sending different versions to unique segments of end users, according to Burton.

With this method, they can compare metrics such as conversion rates and revenue gains across subsets. If one version shows a drop in conversion, the business can roll that version back and shift users to one that performs better.
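The comparison described above can be sketched in a few lines of Python. This is only an illustration: the `VariantStats` class, the `should_roll_back` function and the 10% drop and 1,000-visitor thresholds are assumptions made for the example, not figures from Burton or Harness.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Traffic and orders observed for one deployed version."""
    name: str
    visitors: int
    orders: int

    @property
    def conversion_rate(self) -> float:
        # Avoid dividing by zero when a version has received no traffic yet.
        return self.orders / self.visitors if self.visitors else 0.0


def should_roll_back(baseline: VariantStats, canary: VariantStats,
                     max_relative_drop: float = 0.10,
                     min_visitors: int = 1000) -> bool:
    """Return True when the canary converts noticeably worse than the baseline.

    Both thresholds are illustrative assumptions, not recommended values.
    """
    if canary.visitors < min_visitors:
        return False  # too little data to judge either way
    if baseline.conversion_rate == 0:
        return False  # nothing to compare against
    drop = (baseline.conversion_rate - canary.conversion_rate) / baseline.conversion_rate
    return drop > max_relative_drop


stable = VariantStats("v1", visitors=50_000, orders=1_500)  # 3.0% conversion
canary = VariantStats("v2", visitors=5_000, orders=120)     # 2.4% conversion
print(should_roll_back(stable, canary))
```

A real pipeline would likely add a statistical significance test before acting, since small segments can show large swings by chance.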

But getting an application up and running is just half the battle: Next comes monitoring, feedback and managing.

In the past, a development team only got a call if something failed or went down and they had to troubleshoot. With DevOps, teams are more plugged in to a continuous feedback loop and the entire lifecycle of a product, Burton said.

No team — or platform — is an island

Partner ecosystems are a natural breeding ground for vulnerabilities in an organization's system.

As on a highway, an accident or construction in one area can have a tremendous impact 10 miles away, and the countless hours of manual labor it takes to fix the problem can make matters worse, according to Todd Matters, co-founder and chief architect at Rackware.

IT teams need to understand how unusual traffic or behavior on their platform will affect vendors and partners, and vice versa.

How a service provider provisions its customers can determine how much a traffic spike will disrupt normal operations. If a provider sets up a specific hardware allocation or custom configuration for a customer, unusually high traffic could cause problems because the customer is only provisioned for a certain amount, according to Steve Newman, founder and CEO of Scalyr.

In a pooled service this could be less of a problem because customers are grouped together and pulling from the same server resources.

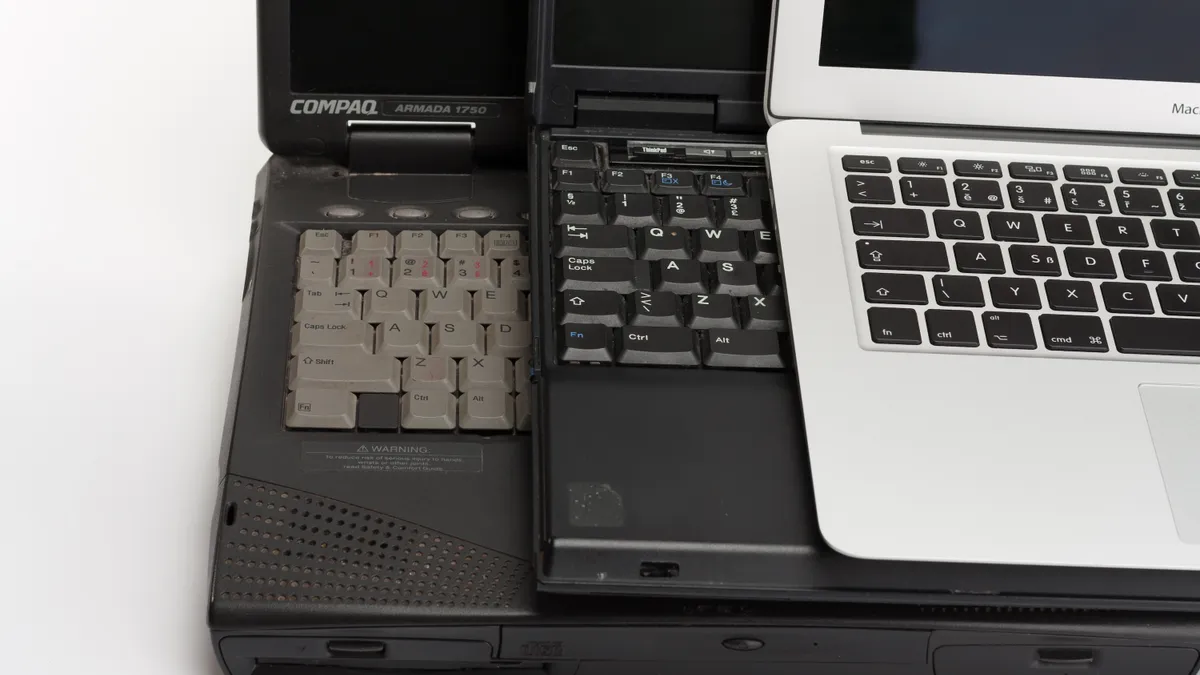

Below the surface of a system, more pieces are also at play thanks to the rise of cloud platforms and microservices.

Microservices allow developers to deliver more innovation and features in parallel tracks at a faster pace, but they also introduce variety to the mix, according to Burton. Instead of one code base a business has to test, there could be 10 to 50.

Each microservice might be written by a different developer, which means some might be tested more thoroughly than others.

When a business actually deploys, just one or two microservices in a mix of 100 could cause significant upstream and downstream impacts, potentially causing a system failure, he said.

In the last few years, sites have become increasingly sophisticated and complex with recommendations and targeted advertising, one-click checkout and send-to-mobile options, Newman said. But these complex features create more places for problems to arise.

Something will always go wrong

No matter how well a company tests every individual part of its system, it can never test everything.

Imitating a model like Netflix's chaos engineering, where production is frequently broken to uncover weaknesses and force teams to deal with a system failure, can help e-commerce teams prepare for the holiday shopping spike, Burton said.

"You never know where the limit is going to be until you hit it," Newman said. There are three questions every business needs to ask themselves going into high traffic days and events:

- What could go wrong?
- Would you know if something went wrong, and how?
- How would you fix that?

The second question is the one that really helps a business prepare to have the appropriate monitoring in place and probe out potential complications on the big day, he said. What metrics will highlight the problem, and how will you localize the problem to solve it quickly?

Businesses shouldn't just focus on negative metrics such as how long it takes a site to load though, according to Newman.

Monitoring the customer experience on a day like Black Friday or Cyber Monday by looking at new signups, visitors and sales can quantify successes and provide key behavioral metrics to understand the customer. And many system problems are likely to tie in to customer-facing applications.

Assuming things will fail and having a strategy in place for when they do is just as important as assuming things will run smoothly, Burton said. Automating deployment, health checks, verification and rollback processes can facilitate resiliency and recovery.

Many businesses still use a lot of manual monitoring and intervention, which can take hours, sometimes even days. Automated capabilities can take a matter of minutes.
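As a rough illustration of what automating the deploy, check and rollback cycle could look like, here is a minimal Python sketch. The `deploy_with_rollback` function and the `deploy` and `is_healthy` callables are hypothetical stand-ins for whatever deployment tool and health endpoint a team actually uses; none of them comes from a specific product.

```python
from typing import Callable


def deploy_with_rollback(deploy: Callable[[str], None],
                         is_healthy: Callable[[], bool],
                         new_version: str,
                         previous_version: str,
                         checks: int = 3) -> str:
    """Deploy new_version, verify it, and restore previous_version on failure.

    Returns the version that is live when the function finishes.
    """
    deploy(new_version)
    for _ in range(checks):
        # A real pipeline would pause between checks; omitted to keep the sketch short.
        if not is_healthy():
            deploy(previous_version)  # automatic rollback, no human in the loop
            return previous_version
    return new_version


# Simulated run: every health check fails, so the previous version is restored.
deployed = []
live = deploy_with_rollback(deployed.append, lambda: False, "v2", "v1")
print(live, deployed)
```

The point of the design is that the decision to roll back is made by the pipeline itself, which is what shrinks recovery from hours to minutes.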

Cloud resources can be provisioned and configured quickly, and autoscaling workloads can prove crucial in scenarios where workload demand suddenly increases 10- to 20-fold, according to Matters. If a group of servers exceeds 85% of utilization, a trigger can alert the system to set aside more bandwidth.

Then, when traffic drops back to a steadier state later on, the system can deprovision some of those workloads to save money.
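A threshold rule of this kind can be sketched as follows. Only the 85% scale-up trigger comes from Matters; the function name `desired_servers`, the 40% scale-down threshold, the 60% target and the server limits are assumptions chosen for the example, and the sketch counts servers rather than bandwidth.

```python
import math


def desired_servers(current_servers: int, avg_utilization: float,
                    scale_up_at: float = 0.85, scale_down_at: float = 0.40,
                    target: float = 0.60, min_servers: int = 2,
                    max_servers: int = 200) -> int:
    """Return how many servers the pool should have after this check.

    avg_utilization is a fraction between 0 and 1. Inside the band between
    the two thresholds the pool is left alone to avoid constant resizing.
    """
    if scale_down_at <= avg_utilization <= scale_up_at:
        return current_servers
    # Resize so the same load would sit near the target utilization.
    needed = math.ceil(current_servers * avg_utilization / target)
    return max(min_servers, min(max_servers, needed))


print(desired_servers(10, 0.95))  # above 85%: provision more servers
print(desired_servers(10, 0.70))  # inside the band: no change
print(desired_servers(16, 0.20))  # traffic has dropped: deprovision
```

Keeping a gap between the scale-up and scale-down thresholds is a common way to stop the pool from flapping when utilization hovers near a single cutoff.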

Beneficial as they may be, autoscaling capabilities are not very widespread among e-commerce players. Many organizations have only implemented 20-25% of what they could, according to Matters.

This means a lot of manual monitoring and intervention is still at play during high traffic events like holiday shopping.