Editor's note: This article draws on insights from a CIO Dive Live conversation between Associate Editor Lindsey Wilkinson and Principal Financial Group's Kathy Kay. You can watch the on-demand session about finding success in AI here.

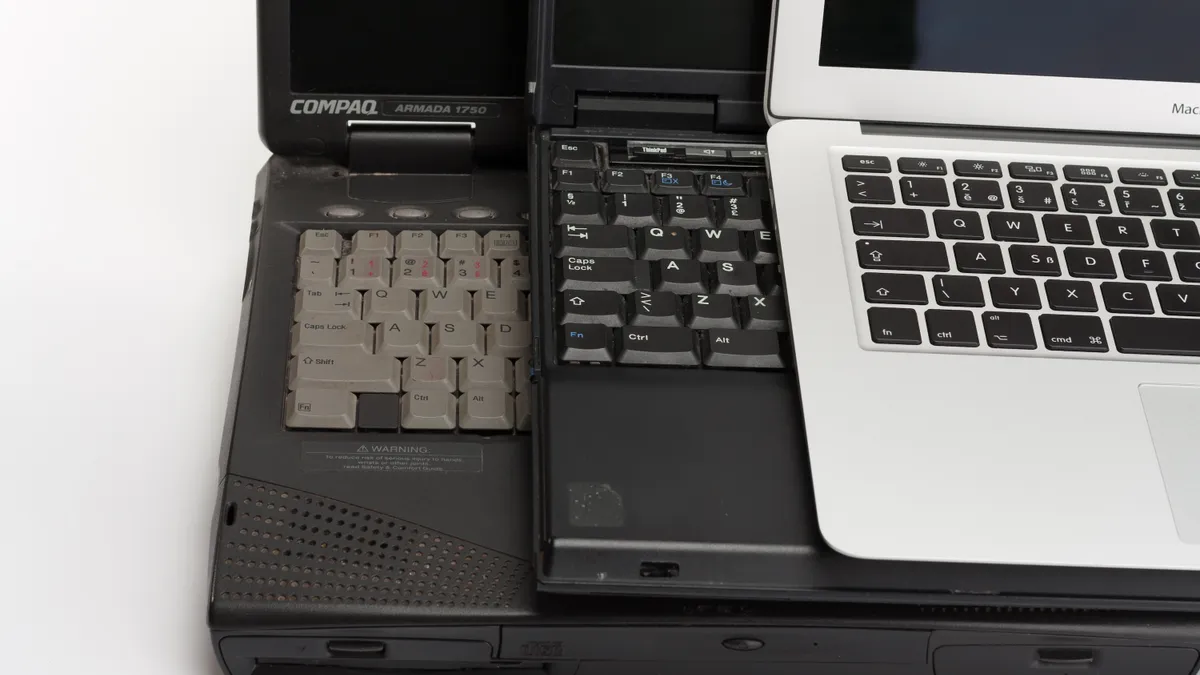

When OpenAI unleashed ChatGPT last fall, Principal Financial Group EVP and CIO Kathy Kay and her team weren’t caught off guard. Like many tech-focused companies, the financial services provider had extensive experience with previous iterations of AI, ML and automation technologies.

The organizational infrastructure was already in place, Kay said during a CIO Dive Live event Wednesday.

“We've actually been leveraging AI and machine learning for quite some time — the more traditional models,” Kay said. “We actually have teams that help build the models, support the models, data engineers that help wrangle the data, machine learning engineers that help create the models and take them into production.”

Emerging technologies challenge IT leaders to react quickly, but with caution and consideration. The rapid proliferation of generative AI tools intensified pressure to mobilize in search of enterprise use cases.

Cloud eased the way, providing companies like Principal Financial Group with ready-made models to test and tune.

“We started with our cloud providers,” said Kay. “Quite frankly, they were available. It was closest to where our data was. The use cases we've been trying out have leveraged models that we can already take advantage of.”

The AI marketplace

Cloud providers jumped on generative AI as an opportunity to rejuvenate revenue growth and spur new usage after a year in which customers focused on optimizing existing deployments.

Microsoft infused ChatGPT throughout its suite of SaaS products shortly after the model was introduced and has continued to deploy new tools, including an AI-infused electronic health records solution announced in July.

AWS and Google Cloud deployed their own LLMs and partnered with other generative AI solution companies to establish multiple-model marketplaces for enterprise customers.

Other providers have been quick to respond, too.

IBM launched generative AI as a managed service through its watsonx platform and announced an AI-enabled coding assistant trained in more than 55 programming languages in August. Snowflake introduced an LLM capable of ingesting legal documents through its data cloud solution in June. And Salesforce rebranded its CRM platform earlier this month as part of an AI push encompassing its Einstein Copilot coding assistant.

Off-the-shelf solutions accessible via cloud APIs provide a testing ground for potential generative AI use cases without the expense and technical resources required to build models in house. The array of tools on the market has covered Principal Financial Group’s needs.

“We haven't yet had a use case in our list where we would have to build the model from ground up,” said Kay.

From testing to use cases

As generative AI reached the enterprise, Principal Financial Group went into testing mode, drawing both on internal tech expertise and stakeholders from outside of IT, Kay said.

“We have a group of about 70 people across the company who have expressed interest and passion for generative AI. It started kind of as a study group,” said Kay. “Now we have this huge cross-functional team that's created all kinds of ideas and we’ve been working together to test a lot of them out.”

Cloud gave Kay’s team the flexibility to test generative AI’s potential broadly before settling on specific business applications of the technology.

“We’ve been lucky that we've been on this journey to cloud so that we can quickly create secure sandboxes that people can leverage,” Kay said, adding, “You’ve got to make sure that you’ve got the right data and that the data is accurate and that there is no bias.”

Assuring data integrity isn’t a new concern, but its importance has intensified. “We had to do this with traditional AI and machine learning models as well,” said Kay. “But with generative AI, it's even more so.”

Kay has teams of engineers working in tandem with business partners to assess the value and risk of various use cases, and she favors a flexible development strategy.

“What I didn't want to do at the very beginning is start imposing some sort of governance and rules as we were learning — that's the worst you can do,” said Kay. “It'll stifle innovation.”

Ingesting and summarizing large documents, improving call center automation and assisting coders are three of the most promising use cases Kay’s teams have tested. Conducting live and post-conversation analysis of agent calls with customers is another.

Currently, Kay is prioritizing ideas that have broad business applications over use cases that offer narrow solutions. But she’s not locked into any one strategy.

“If we talk in six months, I might tell you, ‘We've learned since then, and we've changed our approach to how we prioritize and find use cases because we're continually learning,’” said Kay, adding, “We'll adjust as we learn.”