Editor's note: The following is a guest article from Samuel Bocetta, a former Department of Defense security analyst and technical writer focused on network security and open source applications.

When it comes to software bugs and security flaws, Microsoft has long been a victim of its own success.

The firm employs so many software engineers and publishes so much code that traditional methods for identifying weaknesses in its software are of limited effectiveness. That's why, as Microsoft announced in April, the company is turning to machine learning (ML) models to categorize identified bugs.

The system is 99% accurate when it comes to separating bugs that have security implications from those that do not, Microsoft said.

The announcement could have huge consequences for the way in which AI is used in cybersecurity. Some of the largest AI acquisitions of recent years have been in the security field, but the real-world application of ML models to threat intelligence has so far been extremely limited.

There are also pitfalls to using AI, or at least to relying on it to replace the expertise of experienced security professionals.

AI and ML to the rescue?

The scale at which Microsoft works is dizzying. As Scott Christiansen, a senior security program manager at Microsoft, told The Verge recently, the firm has "47,000 developers [who] generate nearly 30,000 bugs a month."

Assessing the severity and implications of thousands of bugs a month is a huge challenge. Christiansen said his team's goal was to build an ML system that can classify bugs as security-related or not and assess their severity, with an accuracy comparable to that of a security expert.

The focus of the program is primarily to sort identified bugs into those that have implications for system and network security, and those that don't. The ML system has been trained using nearly 20 years of historical data across 13 million work items and is reported to be highly accurate — Microsoft claims its ML engine gets it right 99% of the time.
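The article does not describe the model's internals, so the following is only a toy illustration of the general idea of sorting bug reports by their text. It is a minimal sketch, assuming a naive Bayes classifier over bug titles; the training titles, labels, and function names are all hypothetical, and nothing here should be read as Microsoft's actual method or data.

```python
import math
from collections import Counter

# Hypothetical, hand-written bug titles. These are NOT Microsoft data.
TRAINING = [
    ("buffer overflow when parsing crafted packet", "security"),
    ("authentication bypass via expired token", "security"),
    ("sql injection in search query parameter", "security"),
    ("privilege escalation through installer service", "security"),
    ("button misaligned on settings page", "non-security"),
    ("typo in welcome dialog text", "non-security"),
    ("slow page load on dashboard", "non-security"),
    ("wrong icon shown in settings toolbar", "non-security"),
]


def train(examples):
    """Count words per label and how many titles carry each label."""
    word_counts = {}
    label_counts = Counter()
    for title, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(title.lower().split())
    vocabulary = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocabulary


def classify(title, model):
    """Return the label with the highest naive Bayes log-score."""
    word_counts, label_counts, vocabulary = model
    total_titles = sum(label_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        # Start from the log prior: how common this label is overall.
        score = math.log(label_counts[label] / total_titles)
        # Add-one (Laplace) smoothing so unseen words never give log(0).
        denominator = sum(counts.values()) + len(vocabulary)
        for word in title.lower().split():
            if word in vocabulary:  # words never seen in training are skipped
                score += math.log((counts[word] + 1) / denominator)
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAINING)
first_label = classify("token overflow in packet parser", model)
second_label = classify("icon misaligned in toolbar", model)
print(first_label)
print(second_label)
```

With eight training titles this is nowhere near a usable system; the point is only that a model of this kind learns word patterns from historical, labeled reports, which is why the volume of past data matters so much.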

The reported accuracy represents a major success story for ML techniques. Up until now, and despite the hype, AI and ML technologies have struggled to find real-world, genuinely useful applications.

Their tangible uses have largely been confined to content generators and customer service chatbots. Even in these contexts, they have sometimes caused more harm than good.

Bots, especially, are among the more popular topics in business technology right now, but not necessarily for the best reasons. Though some have been successful, the ones that make headlines tend to do so because they are monumental failures.

Nevertheless, Microsoft's approach is refreshing. It is rare for a large company to detail how many bugs it generates, and rarer still to say how many of these give rise to crucial security vulnerabilities.

The Microsoft team behind the model has published an academic paper that gives a high level of detail on how the system works, and plans to make the ML methodology it used freely available through GitHub in the coming months.

The limitations

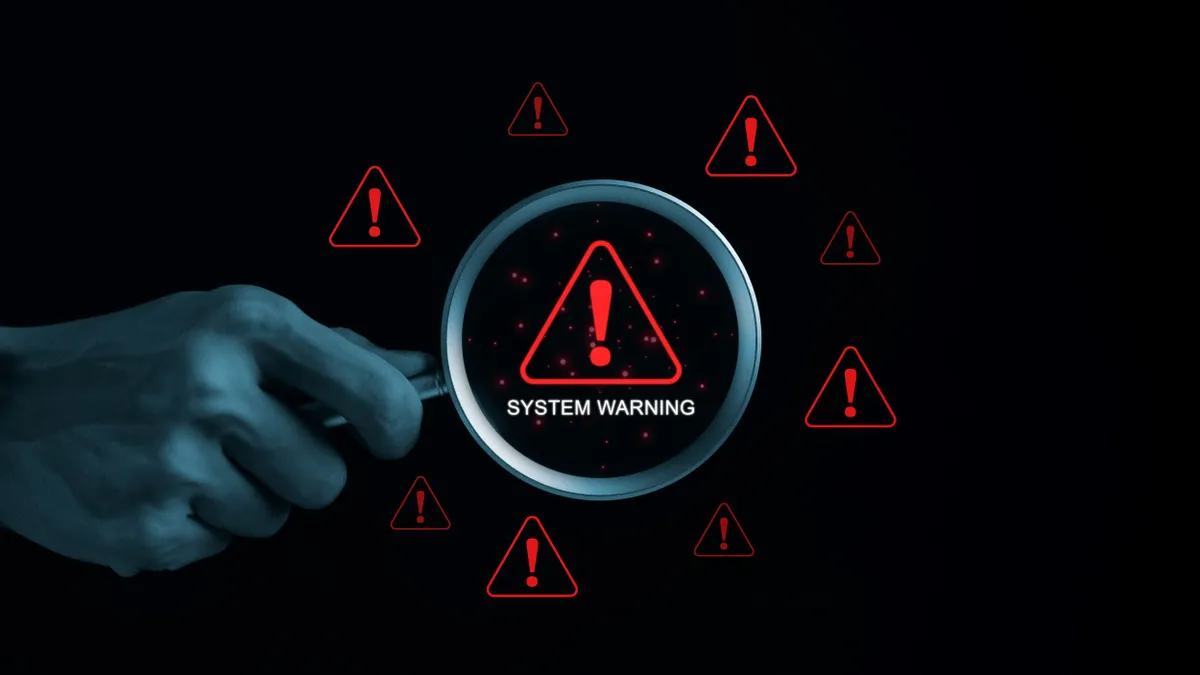

The announcement is of course great news for the AI industry, and for Microsoft. However, look a little beyond the headline figure of 99% accuracy, and it remains unclear just how useful ML models of this type will be.

Although 99% accuracy sounds great, at the scale on which Microsoft works it still leaves a large number of misidentified bugs: roughly 300 a month, or about 3,600 a year, if the error rate applies evenly to the nearly 30,000 bugs generated each month.
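A quick check of the arithmetic, taking the quoted figures at face value, may be useful here. The sketch below assumes the 99% accuracy applies uniformly to every bug reported in a month, which is an illustrative simplification rather than anything Microsoft has stated; the function and constant names are hypothetical.

```python
# Figures quoted in the article. Applying the accuracy uniformly to every
# reported bug is an assumption made purely for illustration.
BUGS_PER_MONTH = 30_000
ACCURACY_PERCENT = 99


def misclassified_per_month(total_bugs: int, accuracy_percent: int) -> int:
    """Expected number of bugs sorted into the wrong category each month."""
    return total_bugs * (100 - accuracy_percent) // 100


monthly_errors = misclassified_per_month(BUGS_PER_MONTH, ACCURACY_PERCENT)
yearly_errors = monthly_errors * 12
print(f"misclassified per month: {monthly_errors}")
print(f"misclassified per year: {yearly_errors}")
```

On these assumptions, a 1% error rate on 30,000 bugs works out to 300 misclassified bugs a month, or 3,600 a year.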

The academic paper published by the architects of the system gives few details on the number of false negatives it generates, raising fears that critical security flaws could be overlooked if Microsoft comes to rely too much on automated systems instead of the experience of security analysts.
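To see why the false-negative count matters more than the headline accuracy, consider a hypothetical month. The sketch below assumes 30,000 bugs of which 600 (an invented 2% share) are genuine security bugs; both confusion matrices are made up for illustration and are not drawn from Microsoft's paper. They show two classifiers with identical 99% accuracy but very different rates of missed security bugs.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    true_positives: int   # security bugs correctly flagged
    false_negatives: int  # security bugs missed
    false_positives: int  # harmless bugs wrongly flagged
    true_negatives: int   # harmless bugs correctly passed over

    def total(self) -> int:
        return (self.true_positives + self.false_negatives
                + self.false_positives + self.true_negatives)

    def accuracy(self) -> float:
        """Share of all bugs that were sorted into the right category."""
        return (self.true_positives + self.true_negatives) / self.total()

    def recall(self) -> float:
        """Share of real security bugs that the classifier caught."""
        return self.true_positives / (self.true_positives + self.false_negatives)


# Hypothetical scenario A: every one of the 300 errors is a missed security bug.
all_misses = ConfusionMatrix(true_positives=300, false_negatives=300,
                             false_positives=0, true_negatives=29_400)

# Hypothetical scenario B: the same 300 errors are mostly false alarms.
mostly_false_alarms = ConfusionMatrix(true_positives=590, false_negatives=10,
                                      false_positives=290, true_negatives=29_110)

for name, matrix in [("A", all_misses), ("B", mostly_false_alarms)]:
    print(f"scenario {name}: accuracy={matrix.accuracy():.2%}, "
          f"recall={matrix.recall():.2%}, missed={matrix.false_negatives}")
```

Both scenarios score 99% accuracy, yet the first misses half of the security bugs while the second misses ten. Without the false-negative figures, the headline number cannot distinguish between them.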

In fact, although it has long been accepted that AI will eliminate some jobs but create others, it's not entirely clear that threat intelligence is a field to which ML models are well suited. Microsoft relied on nearly 20 years of historical data to teach its model what a security threat looks like.

But if the cybersecurity landscape is characterized by anything, it is by novelty and dynamism: ML models require huge amounts of data to train properly, and datasets of this kind are simply not available for emerging threats.

Even if this ML system works well for Microsoft, its application outside the firm will be difficult. The company is one of the last remaining organizations that is built on a centralized model, where threat intelligence, software development, and operational tasks are all handled in-house.

Today, the majority of software firms are enmeshed in an interconnected web of contractors and subcontractors.

To provide useful threat intelligence, ML models will need secure access to data from several firms, if not dozens, and all of these connections will need to be encrypted. This encryption, added to the computational resources required to run ML systems in the first place, will put this kind of system beyond the reach of all but the largest, most integrated firms.

The future

It remains to be seen, of course, how much Microsoft's methodology will be taken up by firms also struggling to manage the number of bugs that are reported in their software.

The system developed by Microsoft — despite its self-reported high success rate — seems destined to remain an interesting but little-used part of the AI ecosystem.

Still, if the methodology gives firms the ability to categorize bugs more easily, this will at least remove some of the pressure on under-resourced and over-worked dev teams. In other words, watch this space.