ChatGPT has entered a new industry: love. But not without a hitch.

Impressed by the hype around ChatGPT’s launch, OkCupid decided to experiment with OpenAI’s technology to help its teams become more efficient.

But first, it wanted to see what users thought of the language model.

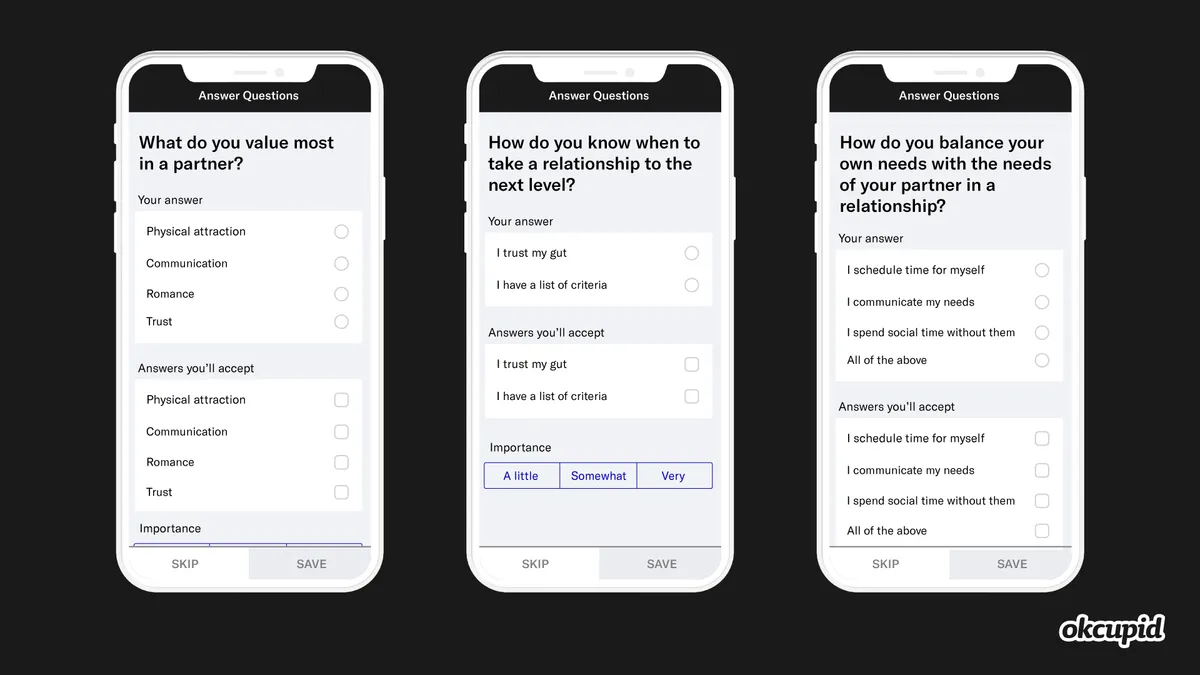

When users of the dating app OkCupid set up their profiles, they fill out all the standard details, including age, sexual orientation and geographic location. Before finishing, users are prompted to answer at least 15 questions, ranging from their views on climate change to their idea of an ideal date.

These answers power the app’s matchmaking algorithm, and users whose answers align are viewed as closer matches, according to Michael Kaye, associate director of global communications at OkCupid. In-app questions are typically drafted by company employees, but OkCupid wanted to see if there was a more efficient way.

In January, OkCupid began asking users what they thought of ChatGPT, adding the question to the pool used to tee up matches. The question essentially sorted users into two groups: those who thought ChatGPT was a “life saver” and those who thought it was too “big brother.”

Daters who called ChatGPT a “life saver” got almost 40% more matches on OkCupid than those who called it “too big brother.” Kaye attributes the gap to the app’s younger dating pool of Gen Z and millennial users.

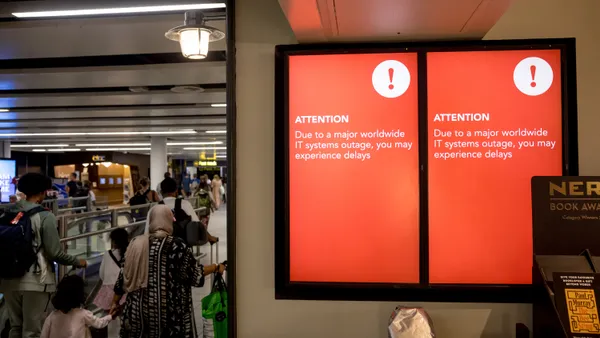

Companies have been racing to unveil the latest AI tool or ChatGPT integration, whether to save time on tasks or to enhance search capabilities. But as with all emerging technology, there are concerns about malicious use, from catfishing on dating apps to phishing in the enterprise.

“This is an area that we need more regulation on, because, frankly, things will get out of control really quickly and people utilize them for malicious intent,” said Ari Lightman, professor of digital media and marketing at Carnegie Mellon University’s Heinz College.

Some ChatGPT use cases, like OkCupid's, start small.

After reviewing the responses, the company began using ChatGPT to generate questions for its daters. Daters answered questions such as:

- Are you more of an introvert or extrovert?

- Are you a morning or night person?

- What’s your favorite way to spend a weekend?

- What do you value most in a partner?

- How do you know when to take a relationship to the next level?

- How do you balance your own needs with the needs of your partner in a relationship?

The ChatGPT-generated in-app matching questions have been answered more than 150,000 times since they were introduced a little over two weeks ago. Given the response, OkCupid is now considering adding new ChatGPT-generated questions every month.

Dangers of AI in dating

Catfishing is a widespread nuisance for daters on any social platform, and as ChatGPT use cases spread across the economy, some users worry that others will use the language model to deceive them.

More than 7 in 10 OkCupid daters believe that using AI to create a profile or message others is a violation of trust, according to in-app responses on the dating app.

Kaye said the company does not support daters using ChatGPT to message others, though the app has not yet had to issue a statement on the practice.

“I do want to put a disclaimer out there that I think these AI tools are really helpful when it comes to product features and moderations on our platform, but I don't necessarily see AI playing a more significant role when it comes to fostering relationships, building personal relationships or even romance between people,” Kaye said.

Some use cases can seem harmless to daters who want to give their profile a competitive edge. Nearly one-third of adults plan to use or are already using AI to boost their dating app profile, according to McAfee data published last week.

Some dating platforms have encouraged users to use ChatGPT-generated content. Iris Dating announced last week that it would integrate ChatGPT into its platform, allowing users to generate in-app bios more efficiently.

For dating app users and enterprise leaders, it has become harder to distinguish between AI-generated and human-written content as the technology has evolved.

More than two-thirds of adults were unable to tell the difference between a love letter written by an AI tool and one written by a human, McAfee found. McAfee CTO Steve Grobman said the chances of receiving machine-generated content are rising as tools such as ChatGPT put the technology in the hands of anyone with a web browser.

“While some AI use cases may be innocent enough, we know cybercriminals also use AI to scale malicious activity,” Grobman said in a statement. “And with Valentine’s Day around the corner, it’s important to look out for tell-tale signs of malicious activity – like suspicious requests for money or personal information.”

It’s a narrative that IT and cybersecurity leaders are all too familiar with.

More than half of IT professionals predict that ChatGPT will be used in a successful cyberattack within the year, according to a BlackBerry survey of 1,500 IT decision-makers across North America, the U.K. and Australia.

Both catfishing on dating platforms and phishing in the enterprise rely on deception and human error, and as technology evolves, threat actors will keep adopting the newest tools to scale their attacks.