As the race to deploy enterprise-grade generative AI moves forward, the three hyperscalers — AWS, Microsoft and Google — are providing glimpses of how large language models and the tools they enable are reshaping infrastructure.

Running generative AI at scale will require massive data storage and compute power on top of the infrastructure, platform, data and software services already supported by cloud.

While excess capacity is built into the cloud model, the added volume will put stress on current infrastructure, a strain the hyperscalers are already moving to relieve.

Alphabet is building out Google Cloud data centers and redistributing workloads to accommodate AI compute, the company’s CEO Sundar Pichai said during a Q1 2023 earnings call last month.

Microsoft is focusing on optimizing Azure infrastructure to achieve the same goal, CEO Satya Nadella said during the company’s earnings call for the quarter ending in March.

The question of resources is, however, top of mind. “The accelerated compute is what gets used to drive AI,” Nadella said. “And the thing that we are very, very focused on is to make sure that we get very efficient in the usage of those resources.”

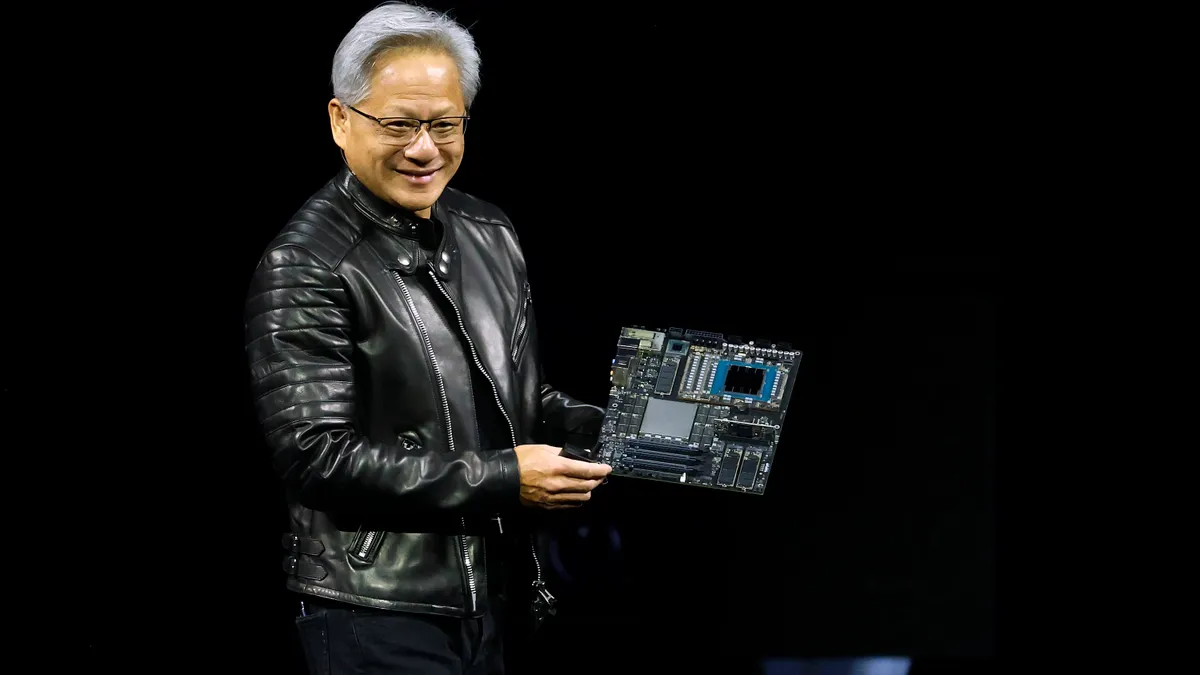

AWS unveiled its next-generation Inferentia2 and Trainium chips for SageMaker last week. The new chips address the need for more powerful, efficient and cost-effective cloud compute hardware to handle ML inference and model-building workloads, according to Amazon.

Amazon CEO Andy Jassy touted AI chip technology advances in a Q1 2023 earnings call last month.

“The bottom layer here is that all of the large language models are going to run on compute,” Jassy said. “And the key to that compute is going to be the chip that’s in that compute.”

“To date,” Jassy said, “I think a lot of the chips there, particularly GPUs, which are optimized for this type of workload, they’re expensive and they’re scarce. It’s hard to find enough capacity.”

Processing the data has to be quick and requires highly scalable compute capabilities, said Sid Nag, VP analyst at Gartner.

“If everybody starts building new applications that use generative AI tools rather than traditional SQL, you can just imagine what kind of load it is going to put on cloud infrastructure,” Nag said.

Compute drives cost

On top of adding capacity, the hyperscalers will have to prioritize efficiency to make the technology affordable.

Already, costs are a concern. OpenAI’s CEO Sam Altman described the compute bills for ChatGPT as “eye-watering” in a December tweet.

A single training run for a typical GPT-3 model could cost as much as $5 million, according to a recent National Bureau of Economic Research working paper.

New Street Research estimated the infrastructure price tag for adding ChatGPT features to Microsoft’s Bing search engine could reach $4 billion, according to a CNBC report. Currently, Bing has less than 3% of the global market for search.

A shift from raw central processing units to more specialized forms of computing, powered by graphics processing units, Google’s AI-accelerating tensor processing units and a new generation of NVIDIA products, creates a new cost dynamic, said Scott Young, director of infrastructure research at Info-Tech Research Group.

“This mix has and will continue to drive the need to increase space to house this compute or properly balance existing space utilization based on customer and internal hyperscaler demand,” Young said.

The challenge is also a business opportunity.

The platform size needed to support generative AI functions isn’t a feasible in-house option for most enterprises, according to Young.

“With most enterprises turning to cloud to provide the specialized platforms and raw power needed, it becomes a new opportunity for hyperscalers to increase market share,” Young said.

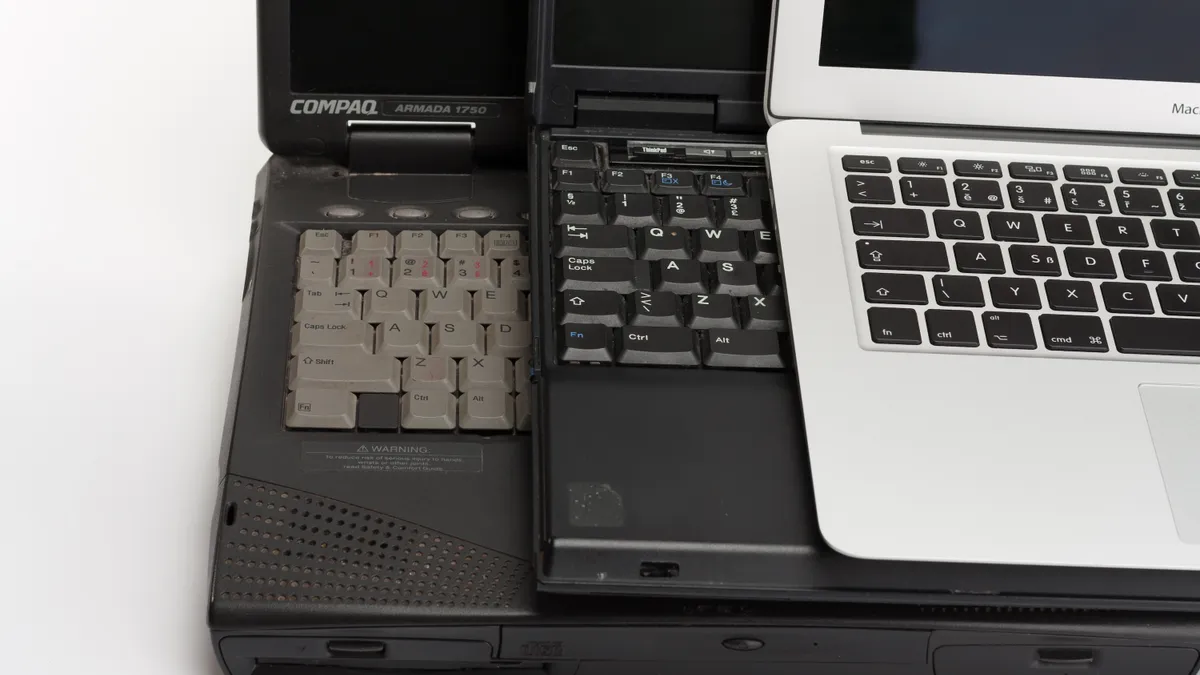

Investments in chips, servers and other infrastructure to support AI advances are not new, according to Nag.

Moore’s Law, which postulates that the number of transistors in a chip and thus its compute power doubles every two years, remains top of mind for the hyperscalers.
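As a rough worked form of that rule of thumb, assume an idealized doubling period of exactly two years (an assumption for illustration; real-world scaling is lumpier). The symbols $N_0$, for the transistor count today, and $t$, for elapsed years, are introduced here and do not come from the hyperscalers or the analysts quoted:

$$
N(t) = N_0 \cdot 2^{t/2}
$$

Under that assumption, a decade yields $2^{10/2} = 2^5 = 32$ times as many transistors on a chip.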

“Before the AI wave, Google and the others were trying to drive down the cost of their data centers, making them more efficient and more sustainable with better silicon technology,” Nag said.

Training better models and identifying enterprise use cases are the current industry focus. That will give way to fine-tuning the technology to meet specific needs.

“The reality, by the way, is that most people are spending most of their time and money on the training,” Jassy said. “But as these models graduate to production, where they’re in the apps, all the spend is going to be in inference.”