Businesses have had to rethink how teams mitigate risks as generative AI becomes a more common addition to enterprise tech stacks. Technology leaders have emphasized relying on workers to validate generated outputs and on frameworks to inform proactive guardrails.

“The risks are real,” Avivah Litan, distinguished VP analyst at Gartner, said during the firm’s IT Symposium/Xpo in October. But mitigating the threats comes with a price, she said.

By 2026, Gartner expects generative AI to cause an enterprise spending spike of more than 15% due to the amount of resources needed to secure it, from access management to governance enforcement.

CIOs who have poured resources into the technology grapple with risk mitigation, unclear ROI and ballooning costs. Solutions that instill trust, monitor reliability and beef up security within AI systems are proliferating as vendors step in to meet rising demand.

Though adding these providers to the swelling enterprise vendor roster can drive up costs, Gartner expects the investment will pay off.

Without guardrails, organizations can leak data through the hosted large language model environment, or providers can repurpose and reuse the information. Hallucinations or generated information from copyrighted works can open the business to reputational harm or legal disputes. Prompt injection, API and vector database attacks are also considerable concerns.

Multiple vendors address each risk category. For content anomaly detection and runtime defense, organizations can turn to Holistic AI, Aim Security and Cisco’s Robust Intelligence, among other vendors and platforms, Litan said. AI governance vendors include IBM watsonx, Cloudera and Casper Labs, which is now known as Prove AI.

“This is going to cost money,” Litan said. “But if you do this, we do think you’re going to get benefits.”

Enterprises that invest in tools for AI privacy, security and risk are expected to achieve 35% more revenue growth than those that hold out, according to Gartner data. Organizations that have invested also report better regulatory compliance and cost optimization.

Strengthening governance

In addition to deploying secure practices in-house, technology leaders can leverage outside services to strengthen governance practices as adoption ramps up.

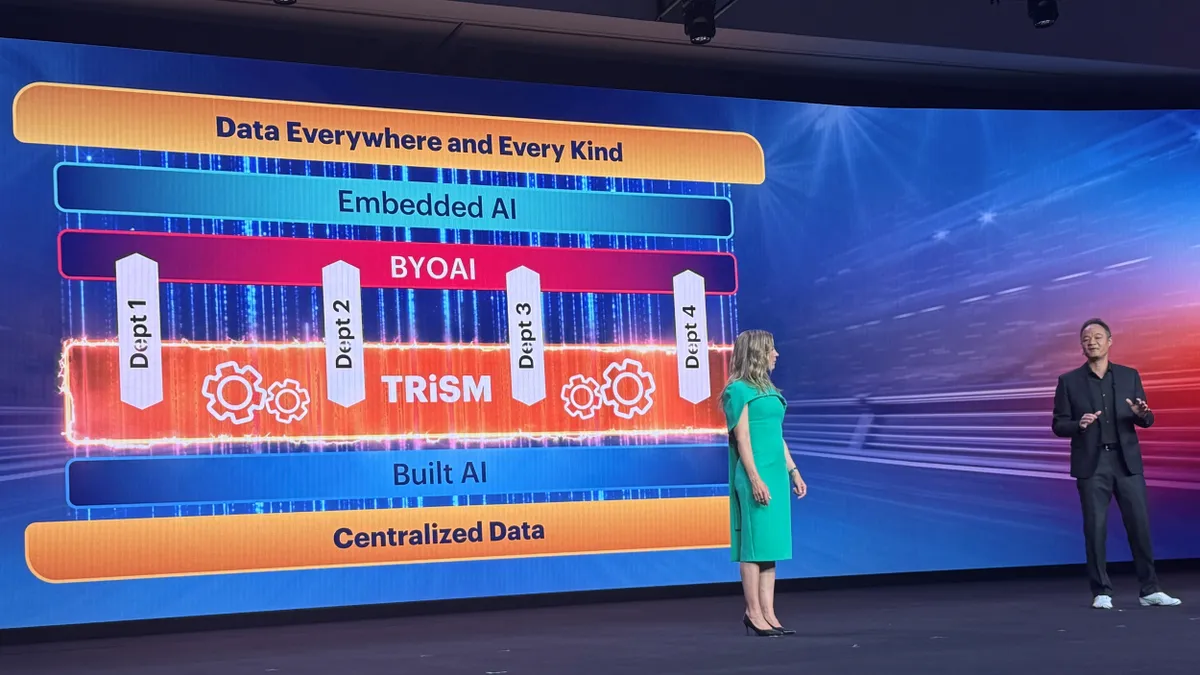

“You need a way to programmatically enforce at scale and at speed your practices, and you can’t just use humans to do this,” said Hung LeHong, distinguished VP analyst and Gartner Fellow, during the conference’s keynote session. “You can’t exclusively use governance practices to ensure trust; you will have to use technology.”

Generative AI is already the most common artificial intelligence technique in the enterprise, according to Gartner data. Most organizations, however, are still figuring out how to govern the technology. Building trust in the tools and services by deploying enforceable best practices is vital to protecting the business from outsized risks.

“We’re pointing teenager technologies at grown-up problems,” Mary Mesaglio, distinguished VP analyst at Gartner, said during the event. “Trust is a currency that we undervalue.”

Adding security and governance-enhancing tools can ease enterprise concerns, but due diligence is still critical.

“You can be sure, whenever there’s problems, there’s entrepreneurs that want to make money solving that problem,” Litan said. “You have to continually validate your third-party software [and] your own applications. You have to continually validate they’re doing what they say they’re doing.”

CIOs should think through budgeting requirements for AI accountability, revisit data classifications and permissions and evaluate where additional services to strengthen governance are needed.

“You still need all your existing risk management measures, layered security, endpoint security, network security, but now you need specific measures focused on the model and on the data,” Litan said.

Clarification: This article has been updated to reflect that Casper Labs is now known as Prove AI.