When Nam Tran decided to fiddle around with AI, he looked for what he called "low-hanging fruit."

Tran, a professor of clinical pathology at the University of California, Davis, and his former UC Davis colleague Dr. Hooman Rashidi landed on predicting which burn patients were at risk of acute kidney injury.

UC Davis has the largest burn center in Northern California, so the pair knew they had the right data, and the disease itself is "defined reasonably well," Tran said.

Their research followed the high-profile failure of the University of Texas M.D. Anderson Cancer Center to use IBM Watson's AI to "eradicate cancer." The project, announced in 2013 and later put on hold, cost the university more than $62 million in three years, according to a Forbes report on a lengthy audit from the University of Texas system.

The Watson outcome raised questions about project scope.

Instead of trying to feed every piece of information about a disease into AI, as M.D. Anderson had, Tran and Rashidi (the latter now a member of Cleveland Clinic's Pathology and Laboratory Medicine Institute) figured they would need only a handful of biomarkers to make this kind of prediction work. They were right, and they published their first paper on the AI's success in 2019.

The lesson for enterprise leaders is that the key to making AI work is finding appropriate use cases. These may not be big, splashy problems like curing cancer, but rather small yet important challenges where AI can make a big difference.

In 2020, Tran and Rashidi founded MILO to sell their AI software. By 2021, they had shown that MILO, combined with point-of-care testing, could quickly and accurately predict acute kidney injury in military burn-trauma care.

MILO is part of a quickly accelerating AI movement. The AI software market is expected to reach $62.5 billion this year, up 21.3% from 2021, according to a recent Gartner forecast. Gartner predicts the top five use cases will be knowledge management, virtual assistants, autonomous vehicles, digital workplace and crowdsourced data.

Still, despite the interest and investment, nearly half of AI pilots stall. In a 2021 survey, Gartner found that only 53% of AI prototypes make it into production.

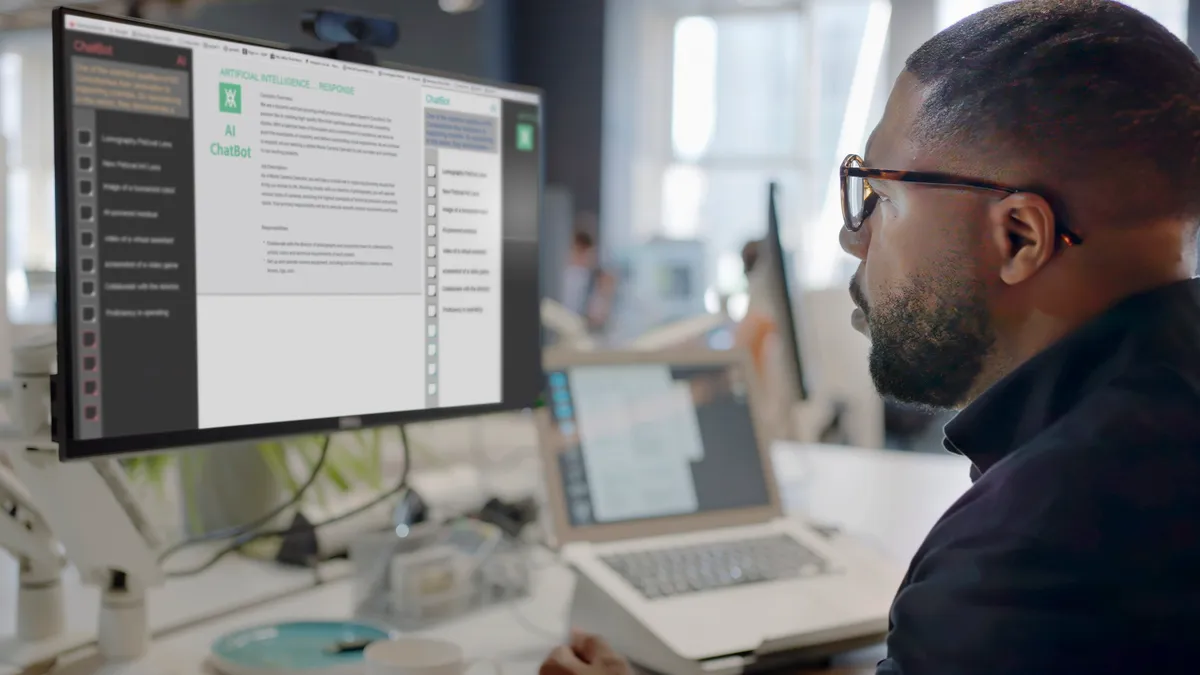

"People think of AI generally as being a human intelligence," said Whit Andrews, distinguished VP analyst at Gartner. "AI is much more like a fairly limited living organism that has a very narrow set of tasks that they follow."

Determining problems ripe for AI solutions

While interest in AI is high, companies still struggle to match the technology's current capabilities to business problems.

Most CIOs understand the technology aspects of AI, but struggle with "the application of the technology to solve a business problem," said Scott Craig, VP of product management at Hyland. Figuring out which areas of the business are most primed for change "tends to be where the conversations get stuck."

Craig has his clients start simple by asking where the business still runs on spreadsheets and where employees still enter and handle data manually.

These may seem like low-stakes processes, but they tend to have "quick ROI and are a better place to start" with AI, he said.

Hyland recently worked with a California healthcare system, deploying its Intelligent MedRecords solution to scan paper medical records, such as admissions forms and insurance cards (some of which still arrive by fax), and index them automatically.

"This was a process that before, someone would manually sit at a scanner and scan them," he said.

The AI solution cut the volume of documents the healthcare system had to process by hand by 80%. The remaining 20% are still processed manually.

"It wasn't over-engineered but it solved a real business problem with immediate financial payback," Craig said.

How to prime a pilot for success

Beyond picking an appropriate problem to solve, finding the right vendor is critical, unless the enterprise plans to build the AI in-house.

To start, CIOs should ask vendors for their definition of AI, Andrews said. "The vendor should be able to write it out for you. If they can't, they can't possibly be selling something with AI," he said.

Craig recommends asking vendors for customer references, similar use cases and the ROI on those use cases. "There are incredibly powerful platforms that have less than a 30% success rate once they've been implemented," he said.

AI models also need the right data to work.

"If it's the wrong data, it gives you bad answers," said Andrews. That means cleaning data, but also not trying to use data meant for another purpose. "People have data they use for analytics, but it's harder to be able to use data for action," he said.

Inappropriate data is one reason the M.D. Anderson/IBM Watson project failed, said Tran. "They thought they could throw all the cancer in the university" into the model, and it would come up with an answer to cancer.

For an AI pilot to become a fully adopted project, it must be monitored and checked throughout the pilot phase. That can help avoid embarrassing outcomes, such as Microsoft's Tay chatbot turning racist less than 24 hours after its 2016 launch, or an error or bias in medical data becoming entrenched across the platform, as one analysis of medical studies found.

AI also needs to be viewed as a long-term project, not a one-off.

Some enterprises "view these AI projects as a one-time spend and you really need to view the lifecycle of that project, so you need to think about 'how am I going to maintain this and continue to refine and adapt,'" Craig said.