By: Matt Ashare

Published June 10, 2025

The banking industry was quick to recognize the business potential of generative AI and, on the flip side, to appreciate the perils inherent in reckless adoption. Adept at managing risk, the sector's largest institutions took a cautious yet persistent approach to moving pilots into production.

Adoption has picked up momentum over the last year, according to Evident Insights, which tracks 50 of the largest banks in North America, Europe and Asia. The 50 banks have announced 266 AI use cases, up from 167 in February, Colin Gilbert, VP of intelligence at Evident, said during a virtual roundtable hosted by the industry analyst firm.

“The vast majority, or about 75%, are still internal or employee facing,” he said, adding that the distribution between generative AI and traditional predictive AI use cases was split roughly 50/50.

As banks integrate the technology into daily operations and models mature, the mix is shifting toward generative AI capabilities with customer-facing features, Mudit Gupta, partner and Americas financial services consulting practice AI lead at EY, said during the panel.

“You tend to start with productivity because it’s low risk,” Gupta said. “You establish proof points so that when you get further down the road of adoption, you can move on to transformation.”

Technology executives from three global banks each put their own spin on Gupta’s formulation.

“We are taking incremental steps to do something exponential,” Rohit Dhawan, director of AI and advanced analytics at Lloyds Banking Group, said. The bank is consolidating its AI efforts to move beyond individual use cases after bolstering its cloud-based data strategy earlier this year with Oracle’s Azure-based database system and Exadata customer cloud data system.

“It’s a very different mindset where you go from thinking about how to infuse or optimize a process with AI to fundamentally reimagining the process with AI,” Dhawan said.

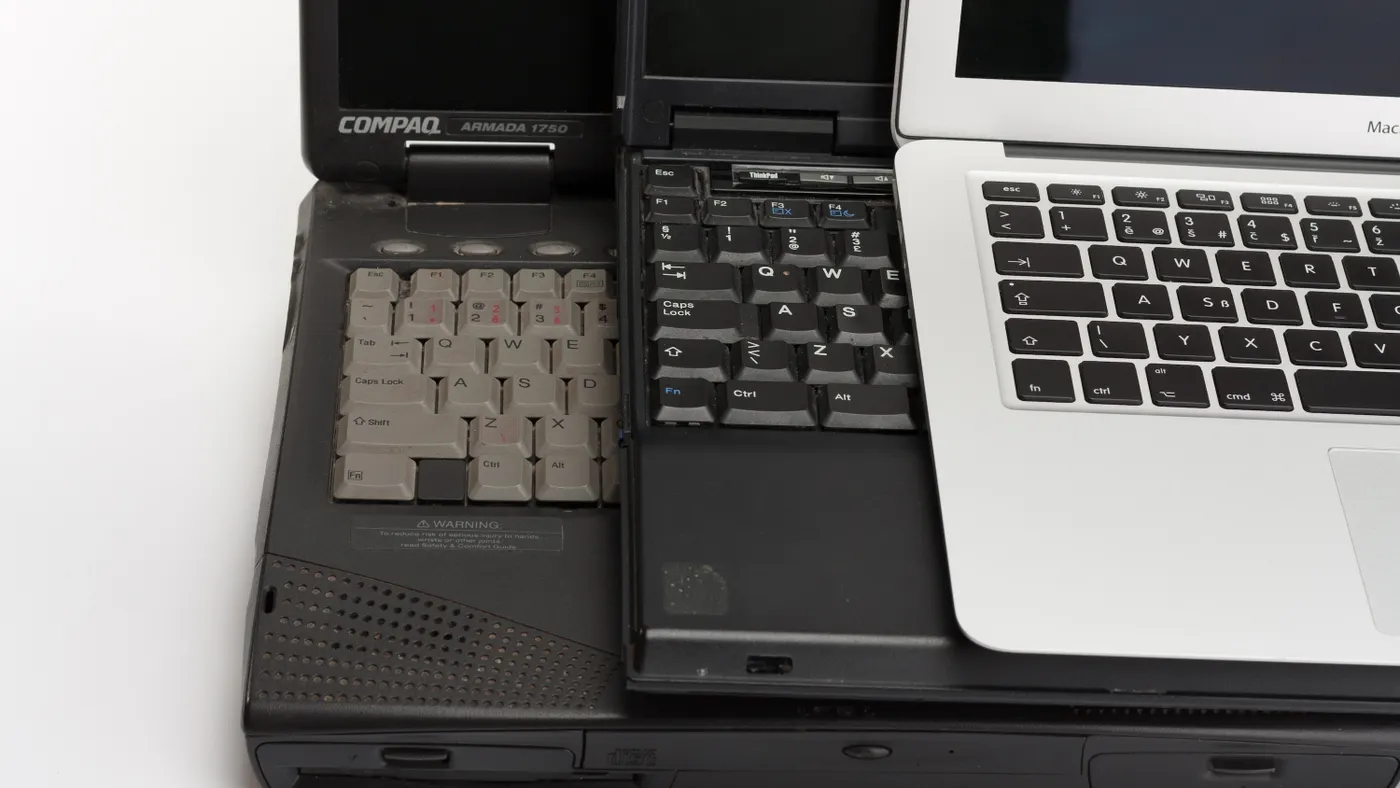

Generative AI use cases abound in banking. The technology has capabilities that reach across processes, from managing vast quantities of customer and compliance data for associates to assisting engineers in refactoring legacy applications.

Banking executives expect generative AI to be capable of handling up to 40% of daily tasks by the end of the year, according to an April KPMG report. Nearly 3 in 5 of the 200 U.S. bank executives surveyed by the firm said the technology is integral to their long-term innovation plans.

AI acceleration

Until recently, NatWest Group was moving gradually with AI, measuring return on investment one use case at a time, the bank’s Chief Data and Analytics Officer Zachery Anderson said during the panel. “We’ve made a pretty big shift in the last eight months to start to reimagine pieces that really looked at customer experiences, in particular, and how we might rebuild those entirely from front to back,” he said.

While AI assistants, such as Bank of America's Erica for Employees and Citi's Stylus document intelligence and Assist virtual assistant, are becoming commonplace, the breadth of the technology's capabilities is expanding as deployments increase. Last September, JPMorgan Chase announced it would equip 140,000 employees with its LLM Suite AI assistant.

“Generative AI is going to impact every function within a bank — every single part of the job,” Accenture Global Banking Lead and Senior Managing Director Michael Abbott told CIO Dive in January, as automated agentic tools began to dominate the AI space.

NatWest is leveraging two deployment pathways, Anderson said. “We have a core set of data scientists, data engineers, that are working on the biggest, most difficult use cases,” he said. “They're working on things that are at the edge of feasibility right now, because usually the models are improving so quickly, but by the time the project's done, what was at the edge before is now in the core of possibility.”

At the same time, the bank is pushing AI into non-technical functions. In addition to giving the tools to developers, NatWest rolled out an internal AI tool to business users and a “very large portion of the users in the bank,” said Anderson.

“The feasible edge of what you can do with the models and the agents right now is not only increasing, but it's also jagged,” Anderson said. “Things you think you can do, you can't do, and things that you end up finding out that you can do surprise you sometimes … with all 70,000 of our employees exploring that edge, we're mapping out the frontier in a much faster way than we were before.”

Truist has moved from quick wins to use cases that reach farther up the banking food chain. “Extracting knowledge is the most popular use case,” Chandra Kapireddy, head of analytics, AI/ML and Gen AI at Truist, said. “It’s really low risk, the data is already out there, and it’s high reward [because] it gets answers pretty quickly.”

Quick answers demonstrate value to business users and help sustain momentum as AI use cases grow in complexity and cost. Early wins also give IT executives the political capital to engage in some necessary experimentation with the technology.

“If you try and aim for perfection, you're going to be spinning your wheels,” Kapireddy said. “That's going to be very productive at the beginning of your use case life cycle. But as you start investing dollars in it, you have to make sure that business stakeholders know that it's going to have an impact.”

Article top image credit: Leon Neal / Staff via Getty Images